If you’ve ever built an automation that looked perfect, then failed at 2:07 a.m., you already know the hard part isn’t building. It’s trusting it. In this Zapier AI review, I’m focusing on two things that matter in real work: how reliable agent actions are, and how much workflow time you can actually get back.

As of February 2026, Zapier is pushing harder into agent-style automation, not just classic “trigger then action” Zaps. That’s exciting, but it also raises a simple question: will it run cleanly when nobody’s watching?

What changed in Zapier AI for 2026 (and why “agent actions” feel different)

Zapier’s 2026 AI updates are less about shiny demos and more about making AI features usable every day. In its latest announcements, Zapier highlights an “Opus 4.6” update aimed at stronger multi-step handling and fewer mistakes. It also introduced 80-plus free AI agents you can customize in plain English, along with upgrades to the “AI by Zapier” app such as model choices, shared prompts, and actions that pull data from the web (based on Zapier’s own February 2026 update notes).

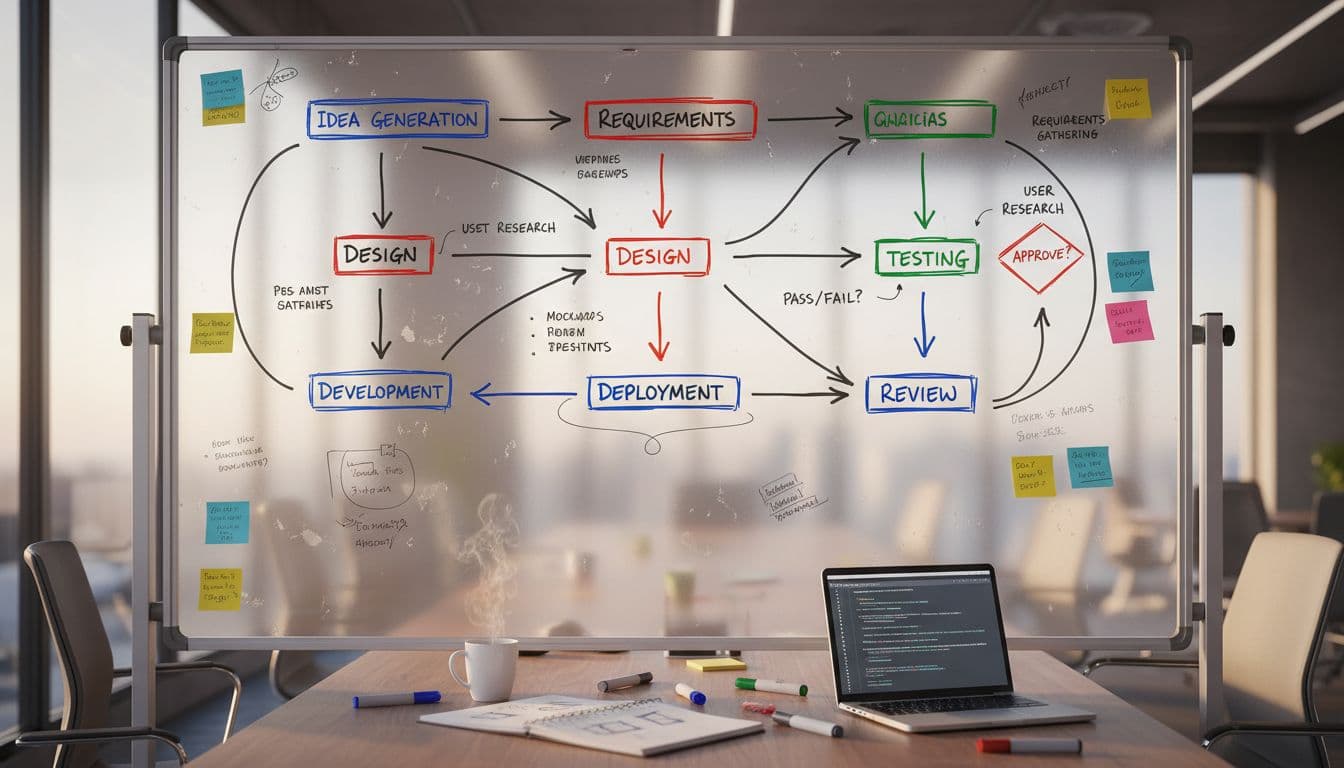

In plain terms, agent actions are meant to behave more like a helpful junior teammate: you give it a goal, and it decides the steps. A classic Zap is more like a vending machine: you press the exact buttons.

That difference matters for reliability. When the AI “decides,” there are more places for things to go wrong: picking the wrong record, formatting output oddly, or calling the right tool at the wrong time.
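To make “more places to go wrong” concrete, here is a minimal sketch of a guard that checks an agent’s proposed action before it runs. It assumes you can see the action as structured data (a tool name, a record ID, and output fields) somewhere you control, such as your own review script. `ProposedAction`, `ALLOWED_TOOLS_BY_STAGE`, and `check_action` are hypothetical names and not part of Zapier.

```python
from dataclasses import dataclass, field

# Hypothetical shape of an action an agent proposes; this is NOT a Zapier data structure.
@dataclass
class ProposedAction:
    tool: str                                     # e.g. "draft_email", "update_crm_stage"
    record_id: str                                # the record the agent chose to act on
    payload: dict = field(default_factory=dict)   # the agent's generated output fields

# Hypothetical allow-list: which tools may run at which workflow stage.
ALLOWED_TOOLS_BY_STAGE = {
    "intake": {"summarize", "tag"},
    "qualified": {"summarize", "tag", "draft_email"},
    "closed": set(),
}

def check_action(action, stage, known_record_ids, required_fields):
    """Return a list of problems; an empty list means the action passed these checks."""
    problems = []
    # Wrong record: the agent referenced an ID that does not exist in your data.
    if action.record_id not in known_record_ids:
        problems.append(f"unknown record {action.record_id!r}")
    # Right tool, wrong time: the tool is not allowed at the current stage.
    if action.tool not in ALLOWED_TOOLS_BY_STAGE.get(stage, set()):
        problems.append(f"tool {action.tool!r} not allowed at stage {stage!r}")
    # Odd formatting: a required output field is missing, not text, or blank.
    for name in required_fields:
        value = action.payload.get(name)
        if not isinstance(value, str) or not value.strip():
            problems.append(f"missing or blank field {name!r}")
    return problems
```

A clean action returns an empty list and can proceed. Any non-empty result is a reason to route the run to a human instead of letting the agent continue.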

If you want context on Zapier’s broader automation direction this year, I found their roundup, the 8 best AI automation tools in 2026, useful because it shows what Zapier considers “table stakes” now.

Also, if you want my baseline view of Zapier’s strengths before the 2026 agent push, my older hands-on breakdown still holds up for core workflows; see Zapier Review 2025.

Zapier AI agent actions reliability: where it breaks, and how I sanity-check it

I judge reliability with one question: would I bet my Monday morning on it?

Agent actions can be solid, but they’re more sensitive to messy inputs than standard Zaps. The issues I see most often in agent-style builds (across tools, not just Zapier) come down to ambiguity and edge cases: unclear instructions, missing fields, duplicates, rate limits, or unexpected app permission prompts.
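Rate limits are one edge case you can partly absorb in code. If a step calls an API you control, for example from your own script or webhook receiver, a common generic pattern is to retry with exponential backoff and jitter. This is a minimal sketch under that assumption. `RateLimitError` is a hypothetical stand-in for whatever your client raises on an HTTP 429 response, and the sketch does not describe how Zapier retries internally.

```python
import random
import time

class RateLimitError(Exception):
    """Hypothetical: whatever your API client raises on an HTTP 429 response."""

def call_with_backoff(fn, max_attempts=5, base_delay=1.0, max_delay=30.0, sleep=time.sleep):
    """Call fn(); on RateLimitError, wait with capped exponential backoff plus jitter, then retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except RateLimitError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the error so it shows up in the logs
            # The delay ceiling doubles each attempt (1s, 2s, 4s, ...) up to max_delay.
            ceiling = min(max_delay, base_delay * 2 ** (attempt - 1))
            # Full jitter spreads retries out so parallel runs don't retry in lockstep.
            sleep(random.uniform(0, ceiling))
```

Passing `sleep` in as a parameter keeps the function testable. Re-raising on the last attempt means a persistent failure still lands in your logs instead of vanishing.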

Here’s the simple checklist I use before I call an agent workflow “safe”:

- Make inputs boring: I force structured fields (dropdowns, labels, IDs) because free-text invites surprises.

- Add a “stop button”: I build a human review step for anything that sends emails, updates CRM stages, or touches billing.

- Test ugly data: I run blank names, weird time zones, emoji subjects, duplicate leads, and long threads.

- Watch the logs for a week: I want to see retries, timeouts, and where errors cluster.

- Lock prompts like code: I version prompts, because tiny edits can change outputs a lot.
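A few of these checks are easy to script before anything goes live. The sketch below assumes you pre-process leads in a step you control, and every name in it is hypothetical:

- `normalize_lead` turns messy input into boring fields and flags what needs review.
- `dedupe` drops repeat leads.
- The `UGLY` fixtures cover blank names, emoji subjects, duplicates, and bad emails.
- `prompt_version` hashes the prompt text so each run can log which prompt version produced it.

```python
import hashlib
import unicodedata

def normalize_lead(raw):
    """Hypothetical pre-processing step: turn a raw lead into boring, structured fields."""
    name = (raw.get("name") or "").strip()
    email = (raw.get("email") or "").strip().lower()
    # Normalize Unicode (emoji, accents) and cap length so long threads don't balloon prompts.
    subject = unicodedata.normalize("NFC", raw.get("subject") or "")[:200]
    return {
        "name": name or "Unknown",  # blank names get an explicit placeholder
        "email": email,
        "subject": subject,
        "needs_review": not name or "@" not in email,
    }

def dedupe(leads):
    """Drop leads whose normalized email was already seen, keeping the first one."""
    seen, unique = set(), []
    for lead in leads:
        if lead["email"] and lead["email"] in seen:
            continue
        seen.add(lead["email"])
        unique.append(lead)
    return unique

def prompt_version(prompt_text):
    """Short content hash: any edit to the prompt, even one space, changes the version."""
    return hashlib.sha256(prompt_text.encode("utf-8")).hexdigest()[:12]

# "Ugly data" fixtures: blank name, emoji subject, duplicate with different casing, bad email.
UGLY = [
    {"name": "", "email": "a@example.com", "subject": "Hi 👋"},
    {"name": "Ana", "email": " A@Example.com ", "subject": "x" * 500},
    {"name": "Bo", "email": "not-an-email", "subject": None},
]

normalized = [normalize_lead(r) for r in UGLY]
```

Logging `prompt_version(...)` next to each run’s output makes it easy to tell whether a change in behavior lines up with a prompt edit.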

The fastest way to lose trust in an agent is letting it message customers without a safety net.

If you’re comparing reliability across automation platforms, it helps to know what “more control” looks like. For complex branching and data shaping, I often point people to Make vs Zapier 2025 because it frames the tradeoff clearly: speed and simplicity versus deeper flow control.

One more reliability note: Zapier has said 97 percent of its builders use AI daily (in its 2026 update messaging). That tells me two things. First, the AI tools are getting easier to adopt. Second, more people are putting AI into production flows, which makes guardrails even more important.

Real workflow time saved: how I measure it (and what savings look like)

“Time saved” is a slippery claim because everyone counts it differently. So I measure it like this: minutes I no longer spend switching tabs, copying text, reformatting fields, or chasing status updates.

When I audit an automation, I do a quick before-and-after pass:

- Time the manual path twice, then average it.

- Count handoffs: every copy-paste, every “open app X.”

- Time the exception path, because failures cost more than successes save.

- Assign a dollar value if the task repeats often (even a rough one helps).

- Re-check after 2 weeks, once the workflow hits real data.
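Here is that before-and-after pass as arithmetic. It is a minimal sketch built on one simplifying assumption of mine: every failed run loses its saving and also costs a fixed number of fix-up minutes. The function name and parameters are illustrative, and the example numbers are made up to show the calculation, not measured results.

```python
from statistics import mean

def weekly_savings(manual_minutes, automated_minutes, runs_per_week,
                   exception_rate, exception_minutes, hourly_rate=None):
    """Estimate net minutes (and optionally dollars) saved per week.

    manual_minutes:    timed manual runs, e.g. two timings that get averaged
    automated_minutes: human minutes still spent per run after automating
    runs_per_week:     how often the task repeats
    exception_rate:    share of runs that need fixing, from 0 to 1
    exception_minutes: minutes spent fixing one failed run
    hourly_rate:       optional rough cost of an hour, to turn minutes into dollars
    """
    saved_per_run = mean(manual_minutes) - automated_minutes
    # Assumption: a failed run saves nothing and costs extra fix-up time.
    net_per_run = (1 - exception_rate) * saved_per_run - exception_rate * exception_minutes
    net_minutes = net_per_run * runs_per_week
    dollars = None if hourly_rate is None else round(net_minutes / 60 * hourly_rate, 2)
    return round(net_minutes, 1), dollars
```

Take two manual timings of 6 and 8 minutes, 1 minute of leftover human time, 50 runs a week, a 10% exception rate, 15 minutes per fix, and a $40 hourly rate. With those inputs, `weekly_savings([6, 8], 1, 50, 0.10, 15, 40)` returns `(195.0, 130.0)`, about 195 minutes and $130 a week. Raise the exception rate to 50% and the same workflow goes negative.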

That exception path is the key. Agent actions can save a lot of time on good days, then give it back on bad days if you’re constantly fixing odd outputs.
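The same trade-off fits in one line. This is a simple model I’m assuming, not a Zapier metric. Let s be the minutes a clean run saves, c the minutes a failed run costs to fix, and p the share of runs that fail:

```latex
\text{net per run} = (1 - p)\,s - p\,c > 0
\iff p < p^{*} = \frac{s}{s + c}
```

With s = 6 and c = 15, the break-even exception rate is 6/21, roughly 29%. Above that, the workflow costs more time than it saves.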

To make this concrete, here’s a simple way I think about savings across common Zapier AI workflows.

| Workflow area | Manual work that disappears | Where agent actions help most | Main risk to watch |
|---|---|---|---|
| Lead follow-up | Copy details, write first email | Drafting personalized outreach | Wrong context, wrong tone |
| Support triage | Tagging, routing, summarizing | Summaries plus category guesses | Misclassification |
| Content ops | Turning notes into tasks | Turning briefs into checklists | Hallucinated details |
| Sales handoffs | Updating CRM fields | Filling missing fields from text | Bad field mapping |
| Reporting | Weekly status copy-paste | Summaries across tools | Data gaps |

The takeaway: agent actions tend to save the most time when the “work” is language-heavy (summaries, drafts, categorizing). They save less when you need perfect data movement.

For adoption trends, Zapier’s own survey write-up, “84% of enterprises plan to boost AI agent investments in 2026,” matches what I’m seeing: teams want agents, but they also want controls.

If you want a broader comparison list while you shop, Vellum’s roundup, 15 Best Zapier Alternatives, is a helpful scan of options and categories (especially if you’re trying to place Zapier among agent and workflow platforms).

My verdict for 2026: who should use Zapier AI agents (and who shouldn’t)

This Zapier AI review comes down to a simple stance: I trust Zapier AI agent actions most when I treat them like assistants, not like autonomous operators.

If your workflow is customer-facing or money-facing, I’d keep a review step in place. On the other hand, if your workflow is internal, like summaries, task creation, routing, or drafting, agent actions can cut a lot of low-value clicks.
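That review rule is simple enough to write down as a routing function. This is a minimal sketch with hypothetical action names, so map your own steps onto the two sets. It fails closed. Anything customer-facing, money-facing, or unrecognized is held for a human approval step, and only known internal actions run automatically.

```python
from enum import Enum

class Route(Enum):
    AUTO = "auto"      # run without a human
    REVIEW = "review"  # hold for a human approval step

# Hypothetical action names; replace them with the steps in your own workflow.
CUSTOMER_OR_MONEY_FACING = {"send_email", "update_crm_stage", "create_invoice", "issue_refund"}
INTERNAL = {"summarize", "create_task", "route_ticket", "draft_reply"}

def route_action(action_type):
    """Decide whether an agent action may run on its own or needs review."""
    if action_type in CUSTOMER_OR_MONEY_FACING:
        return Route.REVIEW
    if action_type in INTERNAL:
        return Route.AUTO
    # Fail closed: an action nobody has classified yet gets a human look first.
    return Route.REVIEW
```

Failing closed costs a few extra approvals early on, but it means a new tool the agent starts calling can’t reach customers before you’ve decided how much to trust it.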

Zapier also looks more serious about making AI usable across teams, with shared prompts, model options, and ready-made agents you can modify in plain language. That lowers setup time, and it helps non-dev teammates contribute without breaking everything.

If you’re still picking your stack, I’d also browse my comparison hub, best AI automation tools 2025, then come back and decide which you need more: flexibility or predictability.

Over the next week, pick one workflow you hate doing, automate it, and track exceptions like a hawk. That’s where the real time savings, and the real truth about reliability, show up.