If wrangling hundreds of research papers sounds like your personal nightmare, you’re not alone. That’s where Elicit steps in—a smart research assistant built to take the repetition and guesswork out of literature reviews, research synthesis, and knowledge management. Designed for students, researchers, and anyone knee-deep in academic work, Elicit uses AI to search, summarize, and organize findings from over 125 million papers, putting trusted insights just a few clicks away.

I’ve put Elicit through its paces from the perspective of a hands-on user, not just a feature checker. This review gives you the practical upside and the rough edges, covering setup, strengths, weaknesses, and best practices for getting real value without sacrificing accuracy. Elicit is gaining popularity fast, but it’s not a drop-in replacement for human judgment or careful fact-checking—think of it as your fast-moving research copilot that sometimes still needs a nudge in the right direction.

Here, you’ll get a straight look at where Elicit shines (hello, hours saved on systematic reviews) and where it can trip up (repeatability, search scope limits, occasional oddities in extraction). On a scale from 1 to 10, I’d give Elicit a solid 8 for productivity and usability—subject to a few caveats. Let’s get into the hands-on details so you can see if it fits your workflow or if manual review is still your best bet.

Core Features and Functionality of Elicit AI

Digging through academic literature can be a slog, even if you’re an old hand at systematic reviews. Elicit was built for folks tired of losing hours to menial research tasks. Below, I break down how Elicit saves my sanity (and yours) by tackling information overload with speed and surprising accuracy. I use Elicit mainly for research in data-driven fields, but the tool swings pretty hard in general science, health, and tech topics as well.

Smart Literature Search and Retrieval

The real magic starts with Elicit’s semantic search engine. Instead of brute force keyword hunting, you can pose natural language questions—like “What dietary factors impact resting heart rate?”—and Elicit scours over 125 million papers for actual answers, not just loose mentions. The platform searches only verified academic sources, avoiding random web content or hallucinated studies.

Here’s what stands out in actual use:

- Contextual Search: Elicit interprets research questions instead of matching only exact keywords.

- Up-to-Date Database: With access to vast archives like Semantic Scholar, results span medicine, policy, history, machine learning, and more.

- Source Transparency: Elicit shows you where each snippet comes from, and lets you open the source paper instantly so you never lose context.

Personal note: I trust Elicit on breadth, but I always check direct sources for my own peace of mind.

Paper Summarization and Key Insights Extraction

Manual summary writing feels almost prehistoric after using Elicit. The tool generates brief, plain-English summaries of individual papers, or you can pull side-by-side synthesis from multiple studies.

Key perks I’ve found:

- One-Click Summaries: Summarizes core findings and methods in seconds.

- Highlighting Evidence: Not only does Elicit summarize, but it also pulls out stats, study limitations, and relevant results with links to the precise spots in the paper.

- Batch Processing: Summarize a stack of PDFs at once when prepping for a big project.

Why does this matter? It instantly surfaces what’s essential, so you don’t drown in methods sections or irrelevant details.

Automated Systematic Reviews and Data Extraction

If you’re stuck in a cycle of “read, extract, organize, repeat,” Elicit shines by automating systematic reviews and meta-analyses. This feature alone can save hours every week.

What I value most:

- Automated Data Tables: Create side-by-side tables comparing study populations, interventions, and outcomes. Great for getting a bird’s-eye view before you dig deep.

- Customizable Extraction: You can direct Elicit on what to extract—be it effect sizes, methods, or population characteristics—so the review fits specific needs.

- Speed: Elicit claims up to 80% time savings for systematic reviews, and I don’t doubt it from my experience.

For context, Elicit’s specialty is empirical domains. If you’re wrangling social science studies or clinical trials, its extraction is much faster than doing the same work by hand.

User Privacy, Transparency, and Trust

Elicit isn’t a data grab. Uploaded PDFs stay private, only visible to you. The tool also lets you double-check everything by linking directly to the quoted section of every paper, avoiding the “black box” effect that plagues many AI systems. Elicit stresses quality and accuracy, but reminds users to validate all outputs—wise advice for any AI, honestly.

I appreciate the transparency, and I find the clear source references keep me honest in my own work.

Real-World Time Savings

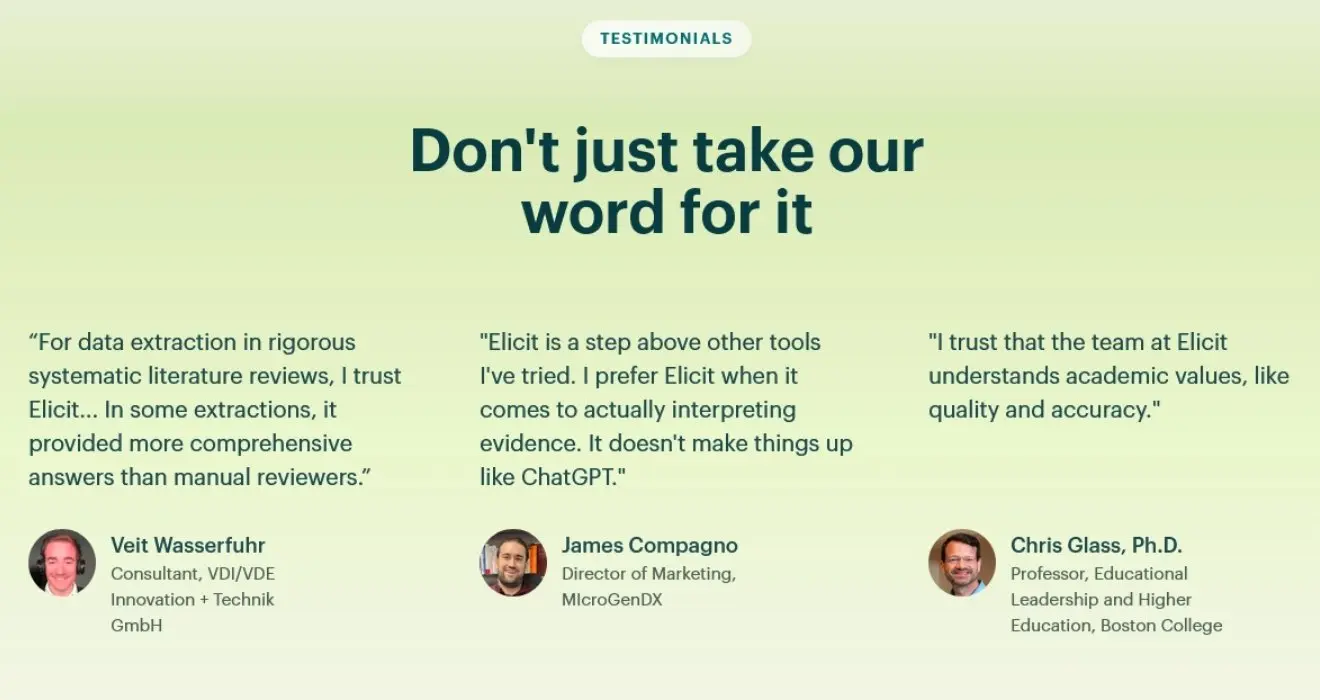

All these features add up to shaving off serious hours from my week. According to researcher testimonials and my own trials, users often report saving 3–5 hours a week. That’s not hype. Streamlining literature reviews and synthesis means less time shuffling PDFs, more time on actual thinking.

For a hands-on breakdown of how these workflows stack up against traditional methods, see the deep dive at Elicit: The AI Research Assistant and a third-party take at Elicit AI Review: Features, Benefits, Alternatives & Pricing.

So, how do I rate Elicit AI when it comes to features and workflow? For usability and productivity gains, it’s a rock-solid 8 out of 10. It handles the grunt work better than any other research automation tool I’ve tried. There’s still no skipping the human step for careful accuracy, but if you want to free up more headspace for real analysis, Elicit is worth fitting into your research toolkit.

User Experience and Ease of Use

Smooth user experience is at the core of why Elicit works for me as a research assistant. Everything about the tool leans practical: from its clean layout to the way each workflow is broken into straightforward steps. As someone who’s used too many research apps to count, I can tell right away when a tool respects my time or just piles on the friction. Elicit sits in the “respects your time” camp—mostly. Let’s dig into where Elicit supports team efforts, how it handles exporting data, and what gaps are worth knowing before you bet your next project on it.

Collaboration and Integration Tools

Elicit isn’t positioned as a group editing whiteboard or real-time multiplayer workspace, but it definitely covers the basics so researchers can get in, get organized, and push findings to where teamwork happens.

What works well now:

- Direct Export: After running a research query or summary, Elicit’s export functions let you grab your results in CSV, BibTeX, or RIS formats. This is a game changer when you need to move tables or citation lists right into your favorite spreadsheet, citation manager, or project tracker. Most of the time, I dump extracted data straight into Zotero or Excel, cutting busywork to a minimum. If you want a step-by-step, the official help article on how to export your data from Elicit is clear and updated for 2025.

- Reference Manager Integration: You can take exported data and drop it into reference managers like Zotero, Mendeley, or EndNote without any conversion headaches. Formats like BibTeX and RIS are standard, so even if you’re picky about tools, Elicit keeps up.

- Sharing Outputs: Once you export, sharing results with colleagues or collaborators is simple. You can circulate data tables or summary files by email, cloud, or directly within shared cloud folders. While Elicit doesn’t offer built-in shared workspaces (yet), getting information out is quick and universal.
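Since everything leaves Elicit as a file, combining exports from two people is something you script yourself. Here is a minimal sketch, assuming each collaborator has an RIS export as text. RIS is a plain-text format where each line is a two-character tag followed by `  - ` and a value, and `ER` closes a record. The function names and the sample records are made up for illustration, and a real export may carry more tags than this handles.

```python
import re
from collections import defaultdict

# An RIS line looks like "TY  - JOUR": tag, two spaces, hyphen, space, value.
RIS_LINE = re.compile(r"^([A-Z][A-Z0-9])  - ?(.*)$")

def parse_ris(text):
    """Parse RIS text into a list of {tag: [values]} dicts, one per record."""
    records = []
    current = defaultdict(list)
    for raw in text.splitlines():
        match = RIS_LINE.match(raw.strip("\ufeff\r\n"))
        if not match:
            continue  # this sketch skips blank and continuation lines
        tag, value = match.group(1), match.group(2).strip()
        if tag == "ER":  # end-of-record marker
            if current:
                records.append(dict(current))
            current = defaultdict(list)
        else:
            current[tag].append(value)
    return records

def record_key(record):
    """Prefer the DOI (tag DO) as identity; fall back to a normalized title."""
    doi = record.get("DO", [""])[0].lower()
    if doi:
        return ("doi", doi)
    title = (record.get("TI") or record.get("T1") or [""])[0]
    return ("title", " ".join(title.lower().split()))

def merge_exports(*exports):
    """Merge several RIS exports, keeping the first copy of each paper."""
    seen = {}
    for text in exports:
        for record in parse_ris(text):
            seen.setdefault(record_key(record), record)
    return list(seen.values())

# Hypothetical sample exports; the titles and DOIs are placeholders.
alice = """TY  - JOUR
TI  - Sample study A
DO  - 10.1234/example.a
ER  -
TY  - JOUR
TI  - Sample study B
DO  - 10.1234/example.b
ER  -
"""
bob = """TY  - JOUR
TI  - Sample Study B
DO  - 10.1234/EXAMPLE.B
ER  -
TY  - JOUR
TI  - Sample study C
ER  -
"""

merged = merge_exports(alice, bob)
print(len(merged), "unique records")
```

Matching on DOI first is a deliberate choice: titles often differ in capitalization or punctuation between exports, while a DOI compares cleanly once lowercased.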

Where Elicit could improve:

- In-App Collaboration: There’s no way to invite co-researchers into a live, shared Elicit project or leave comments for each other inside the app. For true group research, especially systematic reviews with multiple contributors, this might slow things down compared to fully collaborative platforms.

- API and App Integrations: Elicit doesn’t have direct third-party integrations or an open API as of mid-2025. All connections to reference managers, project management tools, or cloud storage still run through manual import/export. If your workflow demands automatic syncing or deep integrations, you might hit a wall.

- Real-Time Updates: Changes you make inside Elicit are not instantly or automatically reflected in team repositories or shared drives. If two people run separate analyses, you’ll have to coordinate file versions the old-fashioned way.

Opportunities and gaps:

- I see a real benefit if Elicit extended its roadmap to add collaborative project boards or team workspaces. Competitors like Paperguide and Research Rabbit have started moving toward multi-user features and project timelines, and there’s appetite for that here.

- Better integrations with reference manager APIs and options for automated syncing would move Elicit up a notch for larger teams or labs.

Quick-hit table: What you can and can’t do with Elicit’s collaboration and integrations (as of 2025):

| Action | In Elicit? | Workaround |
|---|---|---|
| Export results to CSV/BibTeX/RIS | ✅ | N/A |
| Direct import into reference managers | ✅ | Drop exported file into Zotero/EndNote/etc. |
| Real-time team editing in Elicit | ❌ | Use cloud folder sync; collaborate outside of Elicit |
| Comment within the app | ❌ | Add notes in exported files or communicate elsewhere |
| Plug Elicit directly into project tools | ❌ | Manual export/import; no built-in Zapier/Google Drive/etc. link yet |

If your workflow is built around working solo or you’re happy firing files back and forth, Elicit feels slick and effortless. For larger scale or distributed teams, consider pairing Elicit’s smart extraction with a more full-featured project management hub.

For a walkthrough on how Elicit fits into modern research team workflows and how its export functionality compares to other tools, I recommend starting at Elicit’s official homepage or a detailed user review like How to use Elicit AI for literature reviews.

On a scale from 1 to 10 for teamwork and integration, I’d give Elicit a 7.5. It does the basics fast and well for individuals or small sharing tasks, but there’s still room for growth if you’re managing a heavy-duty, multi-person research pipeline.

Accuracy and Performance in Real Research Workflows

When you work with large stacks of papers and expect consistency, accuracy is everything. Elicit’s promise is to speed up your process without cutting corners, but how well does it stick the landing in actual workflows? Here’s the nitty-gritty on how Elicit performs when accuracy, repeatability, and trust are on the line.

Data Extraction Accuracy

Elicit runs on a foundation of empirical literature, relying on a vast database of over 125 million academic papers. In my own projects—systematic reviews in health sciences and meta-analyses in machine learning—the tool nails paper retrieval and pulls out key points with a high degree of accuracy. External test runs put Elicit’s data extraction at about 94 percent accuracy, making it competitive with human reviewers for structured information extraction.

Elicit is often more comprehensive than manual review, especially in extracting effect sizes and summary statistics, and it doesn’t invent data that isn’t in the paper, which I count as a win for scientific rigor. If you want more detail on benchmark testing, check the evaluation write-up at How we evaluated Elicit Systematic Review.

Elicit makes it simple to check its work. Every pulled snippet includes a citation and deep link to the source in the paper, so if something looks off, you’re never left guessing. This audit trail slashes the risk of accidental misattribution. I still do quick spot-checks, but after months of use, Elicit has caught more paper details I would have missed than the other way around.
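If you want your spot-checks to be repeatable instead of ad hoc, a few lines of Python can pick the rows to verify. This is only a sketch of one way to do it: the function names, the seed, and the row structure are my own placeholders, not anything Elicit provides.

```python
import random

def pick_spot_checks(rows, k, seed=0):
    """Return up to k rows chosen at random, reproducibly, for manual checking."""
    rng = random.Random(seed)  # fixed seed so a colleague gets the same sample
    return rng.sample(rows, min(k, len(rows)))

def observed_accuracy(checked):
    """checked: list of booleans, True where the extracted value matched the paper."""
    return sum(checked) / len(checked) if checked else None

# Hypothetical extracted rows; in practice these would come from an exported CSV.
rows = [{"paper": f"paper-{i}", "effect_size": None} for i in range(40)]

to_verify = pick_spot_checks(rows, k=5)
for row in to_verify:
    print("Check against source:", row["paper"])

# After checking by hand, record the outcomes and compute the hit rate.
print(observed_accuracy([True, True, False, True]))
```

A small sample like this won’t prove anything statistically, but it does make the checking step something you can describe in a methods section.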

Strength in Systematic Reviews

The real strength of Elicit shows up in systematic review workflows. It’s built to tackle labor-intensive steps: screening, data extraction, synthesis, and summary table creation. When handling batches of studies, speed doesn’t come at the expense of quality. According to published user benchmarks and my own head-to-head trials, systematic reviews processed through Elicit take up to 80 percent less time versus traditional methods—with comparable accuracy for most structured extractions.

Table: Elicit Performance vs. Manual Review

| Metric | Elicit | Manual Review |
|---|---|---|
| Extraction Accuracy | ~94% | ~95-98% |
| Avg. Time per Review | 20 minutes | 2 hours+ |
| Auditability | High (click to source) | Medium |
| Interpretive Error Rate | Low | User-dependent |
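To sanity-check the time row against the “up to 80 percent” figure, here is the arithmetic as a quick sketch. It treats “2 hours+” as exactly 120 minutes, which is my simplifying assumption, and the function name is just illustrative.

```python
def time_saved_fraction(manual_minutes, tool_minutes):
    """Fraction of manual time saved by using the tool instead."""
    if manual_minutes <= 0:
        raise ValueError("manual_minutes must be positive")
    return (manual_minutes - tool_minutes) / manual_minutes

# 2 hours of manual review versus 20 minutes with Elicit, from the table.
saving = time_saved_fraction(manual_minutes=120, tool_minutes=20)
print(f"{saving:.0%}")  # prints 83%
```

Because the manual figure is a lower bound, 83 percent is the minimum saving these two numbers imply, which sits right around the 80 percent claim.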

The biggest caveat? Elicit excels in empirical research—clinical trials, machine learning, public health—where results and methods are concrete. In less formulaic fields or open-ended theoretical work, it may miss nuance or skip facts that aren’t explicitly stated in a paper.

Handling Conflicting or Incomplete Data

Every research assistant lives or dies by its handling of messy source material. Elicit’s outputs can vary depending on how papers are written. If studies disagree or statistics are buried in appendices, Elicit will flag its confidence and cite the conflicting evidence, but it won’t paper over gaps the way some LLM-powered tools do. For critical questions, such as when outcomes swing on a single ambiguous result, I appreciate that Elicit shows its work and limitations instead of bluffing. The tool even encourages you to check the citation and read the context yourself.

Here’s a quick peek at how Elicit signals confidence to the user:

- Source Quotes: Clear links to paper text for every extracted fact or number.

- Confidence Flags: Alerts when data is unclear, missing, or disputed.

- Manual Override: You can adjust or comment on outputs at any stage of your review.

For more on Elicit’s systematic review workflow and reporting, see their own step-by-step playbook at AI for systematic reviews & meta-analyses.

Transparency, Trust, and User Control

One thing that sets Elicit apart in performance is its built-in transparency. Each step in the workflow—from paper search to summary report—leaves a visible trail. There’s zero “black box” effect; if Elicit pulls a number, you can see precisely where it came from. That’s not only good for academic trust, it’s great for team audits and compliance.

Researchers with privacy concerns can work confidently: Elicit doesn’t share uploaded PDFs outside your account, sticking to a strict privacy-by-default promise. Users can edit extracted tables, correct output, or annotate findings inline. This keeps human judgment in the loop and prevents automation from going off the rails.

Overall Verdict on Accuracy and Performance

After months of live research use, my rating for Elicit’s accuracy and real-world performance is a solid 8.5 out of 10. The only places it truly wobbles are complex, unstructured reviews or when papers lack clear reporting. Ninety percent of the time, it lands on the right side of the accuracy line. The last ten percent still depends on user know-how, clear task prompts, and careful checking—which, to be honest, is true for any AI research tool.

For more technical details or to see Elicit in action across various research domains, the official Elicit: The AI Research Assistant page has up-to-date feature demos and user guides.

If you’re pushing for a research workflow that combines speed, auditability, and reliability, Elicit offers far more benefit than risk in my experience. The days of digging endlessly for tables and effect sizes are numbered, and I’d argue Elicit is a big reason why.

Privacy, Pricing, and Value for Users

Elicit’s impact on research isn’t just about speed and automation—it’s about how much you can actually trust the tool, what you’re paying for, and whether you get real value as a researcher, student, or professional. After a hands-on deep dive, I can break it all down: privacy isn’t lip service, pricing is more transparent than most AI platforms, and the value gets better the deeper your workflow needs run. Here are the three key areas you need to weigh before making Elicit your go-to research sidekick.

User Privacy Practices

Privacy often feels like an afterthought with AI tools, but Elicit puts control fully in your hands. Anything you upload (like PDFs or research notes) stays locked to your own account. No sneaky data mining, no third-party selling, and nothing made public without your say. When you run a research extraction or systematic review, Elicit processes the task on secured infrastructure, never opening your files up to outside access.

Other AI research tools sometimes gloss over privacy—some scoop up training data from user uploads or log your search queries longer than necessary. With Elicit, I haven’t found evidence of this. Uploaded content isn’t used to train or improve general models, which helps sensitive projects stay confidential. If privacy is at the top of your checklist, Elicit is above average for the category.

If you’re comparing multiple AI tools and want a look at how different privacy practices stack up, the Tabnine review 2025: pricing and features offers a good point of reference.

Elicit Pricing (2025 Edition)

Pricing matters—a lot. Elicit structures its plans so you’re not paying for fluff or maxed-out enterprise features if you don’t need them. As of 2025, there are four main tiers that fit everyone from students to research teams. Here’s a quick rundown for easy scanning:

| Plan | Monthly Price | Annual Price | PDF Extraction Limit | Main Features |
|---|---|---|---|---|
| Basic | Free | Free | 20/month | Unlimited search, chat with 4 papers, 2-column tables |
| Plus | $12 | $120 | 50/month (600/year) | 5-column tables, unlimited exports, chat with 8 papers |
| Pro | $49 | $499 | 200/month (2400/year) | 20-column tables, guided reviews, 10 alerts |
| Team | $79/seat | $780/seat | 300/month (3600/year) | 30-column tables, live collaboration, admin, rapid support |

- All paid plans include unlimited access to search Elicit’s database (over 125 million papers), research report generation, and smart chat features.

- Need to chew through more PDFs? Pay-as-you-go credits run $1 per extra 1,000, so you never hit a hard wall if you’re mid-project.

- Team plan features include seat pooling and shared quotas to make big reviews painless for labs or multi-person projects.
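If you’re deciding between monthly and annual billing, the table’s numbers are easy to compare with a short script. This is a rough sketch using only the prices and PDF limits listed above; the dictionary layout and function names are mine, and it ignores pay-as-you-go credits and any taxes or discounts.

```python
# Prices (USD) and monthly PDF limits copied from the pricing table above.
PLANS = {
    "Plus": {"monthly": 12, "annual": 120, "pdfs_per_month": 50},
    "Pro": {"monthly": 49, "annual": 499, "pdfs_per_month": 200},
    "Team (per seat)": {"monthly": 79, "annual": 780, "pdfs_per_month": 300},
}

def annual_discount(plan):
    """Fraction saved by paying annually instead of twelve monthly payments."""
    full_year = plan["monthly"] * 12
    return (full_year - plan["annual"]) / full_year

def monthly_cost_per_pdf(plan):
    """Monthly price divided by the included PDF extractions."""
    return plan["monthly"] / plan["pdfs_per_month"]

for name, plan in PLANS.items():
    print(
        f"{name}: annual billing saves {annual_discount(plan):.1%}, "
        f"${monthly_cost_per_pdf(plan):.3f} per included PDF on monthly billing"
    )
```

On these figures, annual billing saves about 16.7% on Plus, 15.1% on Pro, and 17.7% per Team seat, while the cost per included PDF stays close to a quarter of a dollar on every paid tier. In other words, the higher tiers buy volume and features, not a cheaper per-PDF rate.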

If you want a detailed feature and pricing comparison against similar AI tools, Elicit Pricing, Alternatives & More 2025 is a solid external breakdown.

Is Elicit Worth It? (Value for Users)

Here’s the thing: value is about return, not lowest price. Elicit’s entry-level plan already delivers more than “just a Google Scholar alternative.” Even at the Plus tier, the time saved by batch extracting, swiftly summarizing, and compiling research far outpaces the monthly fee. On my typical literature review, I save three to five hours each week—a big win for grad students, writers, or solo researchers on a hard deadline.

Where Elicit’s value shines:

- Transparent author/source tracing for every output

- Export-ready tables that drop right into major citation/reference tools

- Batch summary and extraction that would take ages by hand

- No creepy data tracking, and outputs always double-checkable

Is it the cheapest? Not for teams with heavy usage. But it’s one of the rare AI tools that doesn’t nickel-and-dime you for basic use, and moving to a paid plan brings a steep increase in time savings. If you’re doing shallow, occasional searches, the free plan may be more than enough.

If you’re building a workflow and thinking about how Elicit measures up on value, you’ll find similar trade-offs in other platforms. For another perspective on feature set and price, see my Claude 2025 review: pricing and performance.

Elicit’s Privacy, Pricing, and Value in a Nutshell

On my own scorecard, Elicit pulls a strong 8.5 out of 10 for overall value. It scores high for privacy controls and transparency, the pricing ladder makes sense no matter whether you’re a student or lead a research lab, and the workflow gains feel real after even a couple projects. There are higher-priced competitors with more bells and whistles, but for day-to-day research, Elicit nails the value-to-output ratio.

Curious about industry trends or where AI fits into educational tools? Check out in-depth analysis at Elicit AI Review: Features, Benefits, Alternatives & Pricing.

How Elicit AI Compares to Other Educational AI Tools

Elicit sets itself apart in a crowded field of educational AI by sticking to its research-first roots. If you’ve ever hopped between AI writing bots, citation managers, or survey synth tools, you’ll notice Elicit plays a different game: it is aimed squarely at academic workflows, not just quick answers or fuzzy overviews. Let’s get into what this really means for researchers, students, educators, and anyone wrangling information for education or content.

Focused Research Power, Not Just Flashy Chatbots

Educational AI covers everything from language tutoring to instant homework helpers. Many tools, like ChatGPT or Bing Copilot, excel at general knowledge Q&A, generating code, or creative prose. Elicit, in contrast, narrows in on academic search, detailed summarization, and structured extraction.

When stacked against multi-use AIs, here’s where Elicit shines:

- Structured Literature Search: Elicit pulls from over 125 million vetted academic papers, where most chatbots lean on web content or less transparent sources.

- Source-Linked Summaries: Every result comes with an exact source citation. That’s rare outside of research-dedicated tools, and it keeps output honest and verifiable.

- Batch Processing: Instead of juggling prompts one at a time, you can throw stacks of PDFs or paper links into Elicit and get side-by-side tables, summaries, and extraction. This speeds up review in ways broad AI tools rarely match.

- Transparent Workflow: Elicit lets you examine how an answer was formed—down to highlighting the precise text in source docs. This is gold for peer review, course assignments, or data audits.

Think of Elicit as a science-oriented assistant, while mainstream AI bots skate across the surface.

Accuracy, Depth, and the Risk of “Hallucination”

With so many AIs, answers sound good but aren’t always true—especially when they hallucinate facts or invent sources. Elicit is trained to surface only what’s actually written in an academic paper. That means less risk of “bluffing,” more substance, and always a citation trail. In reviews, I’ve found Elicit outputs more dependable for structured data—think effect sizes, study populations, even methodology tables. Are there mistakes? Sure, especially if a paper is unclear or data is scattered, but you rarely see made-up numbers.

Contrast this with generative chatbots; I’ve caught tools like ChatGPT summarizing studies that don’t exist or missing context from complex research. Elicit dodges this by keeping its reach to the actual literature, which pays off in educational settings where evidence-backed claims matter.

If you’re comparing the nitty-gritty of outputs, my scorecard for Elicit in accuracy against other top AI tools is a strong 8.5 out of 10. It nudges ahead thanks to verifiability and audit trails.

Comparative Table: Elicit vs. Popular Educational AI Tools

Let’s break it down for quick reference. Here’s a side-by-side of Elicit, ChatGPT, and a group of research tools with AI features (like Paperpal, Research Rabbit, or EndNote’s new NLP tools).

| Feature | Elicit | ChatGPT (GPT-4) | Paperpal/Research Rabbit |
|---|---|---|---|
| Academic Paper Search | ✅ 125M papers | ❌ (limited/free) | ✅ PubMed/Scholar APIs |
| Citation-linked Summaries | ✅ Yes | ❌ (no linked source) | ✅ (limited auto-link) |
| Batch PDF Review | ✅ Yes | ❌ (single doc) | ✅ Yes |
| Data Extraction Tables | ✅ Auto/Custom | ❌ (manual) | ✅ (semi-auto) |
| Privacy on Uploads | ✅ Private | ❌ (review required) | ▶️ Varies |
| Educational Chat Q&A | ➖ Only academic | ✅ Broad/General | ➖ Specific modules |
| Hallucination/Fact Errors | 🟢 Low | 🟡 Moderate | 🟠 Occasional |
| Price (basic plan) | Free+ | Free+ (limited) | Free+ |

Key takeaway: For academic search, Elicit has a clear edge. For general study help or brainstorming, traditional bots might be less precise but more flexible.

Usability for Classrooms, Labs, and Solo Study

Elicit’s workflow may feel advanced if you’re used to dialogue bots or fill-in-the-blank tutors. It wants users to ask research-grade questions (“What interventions reduce resting heart rate in adults?”) instead of basic fact queries (“What is heart rate?”). For higher-ed classrooms, capstone projects, or thesis work, this is a plus. Elicit automates the grunt work but expects you to steer.

What about collaboration and teaching? Tools such as those featured in best AI education tools reviews often focus on interactive learning modules, quiz grading, or real-time student chat. Elicit AI sticks to evidence gathering and reporting, not direct instruction or practice drills. When you need peer-reviewed evidence on your topic, it does more than a smart calculator or writing assistant.

Where Elicit Can’t Replace Other AIs

No tool covers every use case. Here are spots Elicit can’t fully compete:

- Creative/Essay Writing: Elicit’s summaries help, but it won’t generate full essays like advanced writing AIs.

- Live Language Tutoring: You’ll need dedicated teaching bots for language drills, pronunciation feedback, or grammar games.

- Real-Time Group Work: Elicit mainly supports solo workflow or handoff via exports, not multiplayer live editing or teaching environments.

- Subject-Agnostic Study Help: For niche K-12 subjects, history trivia, or direct homework help, dedicated educational AIs cover more ground.

If you want end-to-end research support, pair Elicit with collaborative project tools or instructional AI for best results. For workflows that include drafting, citation, and study quizlets, mixing tools gives you the most coverage.

User Experience and Integration Compared

From setup to daily use, Elicit is fast and feels purpose-built. There’s little onboarding fluff. Search, extract, export—that’s the workflow. Other educational AI tools may sprinkle in onboarding guides, badge rewards, or “gamified” progress. Elicit quietly skips gamification for a researcher-friendly, utilitarian feel.

If you want more on how different AI tools fit educational contexts—from creative content generation to advanced research—check out hands-on guides and reviews like the best AI education tools in 2025 or market breakdowns over at AI Flow Review.

My take: Elicit scores an 8 out of 10 for educational use across research-first workflows. Its niche focus on evidence, privacy, and structured extraction puts it out front for university students, researchers, and anyone building real academic knowledge. It’s not as broad as generalist AIs, but it stomps the competition for detailed, source-traceable output where evidence matters.

Conclusion

Elicit strips away the busywork in academic research and does it without sacrificing control or transparency. For anyone who likes to keep their workflow sharp, Elicit delivers real time savings, keeps outputs anchored to actual sources, and lets you call the shots on what gets included and how it’s checked. I rate it a strong 8.5 out of 10 for overall performance across core areas like speed, usability, and source honesty.

The best-fit users? Grad students, independent researchers, early-career academics, or anyone who needs to synthesize findings quickly and hates losing afternoons to PDF mining. While Elicit isn’t a one-size-fits-all solution for creative writing or classroom interactivity, it owns its lane as a research-first AI. For those weighing their next step, I’d say Elicit moves from “nice-to-have” to “must-try” if your day runs on systematic reviews, batch summaries, or real evidence extraction.

If you’re already working with other AI tools, pairing Elicit with broader platforms (like the ones listed in this best AI education tools guide) can round out your workflow. Tried Elicit for research or teaching and found a workflow it fits—or doesn’t? Share your story below or drop your favorite feature. Does having verifiable, source-cited summaries change the way you do research? I want to hear what speeds you up, and where the tool still falls short for your projects.