Qodo AI is shaking up how developers handle code reviews in 2025. With growing codebases, tight deadlines, and demanding quality standards, AI-powered tools have become must-haves for anyone who wants to work smarter (not just faster). This Qodo AI review breaks down what actually works, what gets in the way, and how it feels to rely on Qodo AI for real projects.

Here’s what I noticed from hands-on tests: Qodo AI promises smart suggestions, fast automated checks, and less busywork for teams. But hype alone doesn’t cut it, so I looked at accuracy, ease of use, and where it falls short. Developers, AI power users, and anyone balancing quality with speed will get a realistic sense of what’s strong, what’s clumsy, and where Qodo AI fits alongside the best AI coding assistants of 2025.

If you want a clear take: I’d rate Qodo AI a solid 8 out of 10. It pushes code quality forward but still needs a sharp human eye and some manual polish. Let’s get right into what sets it apart—and where you should still trust your own experience.

What is Qodo AI and How Does It Work?

If you’re like me, you want your AI tools to do more than just spit out generic suggestions. You want them to make real decisions, catch actual bugs, and help the human reviewers (not just automate for the sake of it). Qodo AI is part of this new wave—it’s built specifically for code review speed, accuracy, and team context. In this section, I’ll break down what Qodo AI actually is, how it does what it does, and where it stands out in my hands-on experience.

What Exactly is Qodo AI?

Qodo AI positions itself as an “AI agent” that handles code review and developer workflow tasks. Think of it as a virtual team member that scans every pull request, flags risks, clarifies intent, and even helps merge code—all right from your GitHub, GitLab, Bitbucket, or Azure DevOps account.

At the core, Qodo AI takes what used to be hours of manual reviews and compresses it into minutes (sometimes seconds). It’s not designed to replace a senior developer’s eye for detail, but to support those moments when the team is buried under small changes and mounting PR backlogs.

- Code review automation: Qodo spots syntax errors, security pitfalls, and logic bugs in pull requests before anyone hits approve.

- AI-powered suggestions: It offers fix prompts and explains why something might be off, rather than just scolding you like a linter.

- Custom team context: You get reviews that feel aware of your coding standards, documentation style, and even team history.

Qodo AI was formerly known as CodiumAI (not to be confused with Codeium, a separate company), and it has gathered attention for its deep integration with the developer workflow. It pulls in context from the whole codebase, not just the lines in a diff, so its suggestions often feel less “robotic” and more like those of a knowledgeable peer.

Under the Hood: How Qodo AI Works

The real magic happens with a technique called Retrieval-Augmented Generation (RAG), which is just a fancy way of saying “it reads more before giving advice.” Qodo AI ingests your repo’s full context, checks the current changes, and pulls in related docs, patterns, and past merges to tailor its review. What stands out is the multi-layered decision-making:

- Code ingestion: Qodo analyzes the entire codebase, storing relevant patterns and records of previous merges, so when a pull request drops, it understands both the code style and historic decisions.

- Automated review: For each PR, Qodo detects:
  - Syntax mistakes
  - Security vulnerabilities
  - Style and documentation issues
  - Repetitive or duplicate code

- Severity detection and explanation: Instead of just red-flagging issues, Qodo ranks them by priority and explains their impact, which helps teams triage what matters now versus later.

- Direct pull request comments and summaries: Review output goes straight into the PR (suggestions, questions, and merge blockers), along with readable summaries and context for reviewers. Need to know if a bug fix creates new risk? Qodo leaves breadcrumbs and cross-references.

- Integration and workflow support: Qodo connects with most major repo hosts and CI/CD pipelines. For developers working in the IDE, the Qodo Gen plugin brings those insights straight into VSCode or JetBrains, with AI-generated test suggestions and code explanations at your fingertips.
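To make the retrieval idea concrete, here is a toy Python sketch of the “read more before giving advice” step. It is not Qodo’s implementation: the function names, the sample files, and the identifier-overlap scoring are assumptions for illustration (production RAG systems usually rank by embedding similarity instead). The retriever scores each repository file by how many identifiers it shares with the diff, then adds the best matches to the review prompt that would be sent to a model.

```python
import re
from typing import Dict, List, Set

# Toy retriever for illustration only. Real RAG pipelines usually rank by
# embedding similarity; identifier overlap keeps this sketch dependency-free.
IDENTIFIER = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def identifiers(code: str) -> Set[str]:
    """Return the set of identifier-like tokens in a piece of code."""
    return set(IDENTIFIER.findall(code))


def retrieve_context(diff: str, repo: Dict[str, str], k: int = 2) -> List[str]:
    """Return paths of up to k files that share identifiers with the diff."""
    wanted = identifiers(diff)
    scored = [(len(wanted & identifiers(src)), path) for path, src in repo.items()]
    # Highest overlap first; ties broken by path so the order is deterministic.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [path for score, path in scored[:k] if score > 0]


def build_prompt(diff: str, repo: Dict[str, str], k: int = 2) -> str:
    """Augment the review request with the retrieved files before generation."""
    parts = ["Review this diff using the related files below.", "", "DIFF:", diff]
    for path in retrieve_context(diff, repo, k):
        parts += ["", f"FILE {path}:", repo[path]]
    return "\n".join(parts)


if __name__ == "__main__":
    repo = {
        "billing/tax.py": "def apply_tax(total, rate):\n    return total * (1 + rate)",
        "billing/invoice.py": (
            "from billing.tax import apply_tax\n\n"
            "def invoice_total(items, rate):\n"
            "    return apply_tax(sum(items), rate)"
        ),
        "docs/README.md": "Project overview and setup steps.",
    }
    diff = "-def apply_tax(total, rate):\n+def apply_tax(total, rate, region):"
    print(retrieve_context(diff, repo))
    print(build_prompt(diff, repo))
```

In this example, `billing/tax.py` ranks first (it shares `def`, `apply_tax`, `total`, and `rate` with the diff), `billing/invoice.py` comes second because it calls the changed function, and the unrelated README is dropped. That second hit is the whole point: a diff-only reviewer would never look at the caller.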

Here’s a quick side-by-side on where Qodo AI’s focus falls in the AI code review field:

| Feature | Qodo AI | Classic Linter | Other AI Reviewers |
|---|---|---|---|
| Team Context Awareness | Yes | No | Sometimes |
| Severity Ranking | Yes | No | Yes |
| In-IDE Suggestions | Yes | No | Sometimes |
| Pull Request Automation | Yes | No | Yes |
| Integration with History | Yes | No | Rarely |

Why Qodo AI Caught My Attention

Qodo isn’t just for code audits. It lets me move faster during sprints, helps onboard juniors with smart feedback, and shaves hours off code review cycles. Based on reports from hands-on users and growing online buzz, it’s one of a handful of tools that consistently save time without creating a false sense of security. One user shared on Reddit that its feedback feels both smart and “team aware.”

Plenty of tools claim to automate code review, but Qodo’s combination of deep context and actual reasoning keeps it from feeling like “just another Copilot.” If you want a closer look at how Qodo stacks up against others, this Medium review offers a user-first perspective with honest breakdowns.

Quick take: I’d rate Qodo AI a strong 8 out of 10. Its “know your team” angle, real bug catching, and practical explanations make it more trustworthy than most. Still, you’ll want to pair it with human oversight (especially for big refactors or security risks).

If you’re juggling steady PRs and sprint planning, Qodo AI can help you work smarter and keep the quality bar high. Keep reading for a full feature list, pain points, and real team outcomes.

Key Features Tested: Where Qodo AI Stands Out

For this Qodo AI review, I wanted to see which features would hold up under steady use and actually help developers move faster without creating more problems. I went beyond the sales pitch, testing Qodo on active pull requests, real bugs, and live sprint tasks. Here’s where Qodo AI grabs attention and earns its 8 out of 10 rating in my book.

Automated Code Review That Feels Like a Real Teammate

Qodo AI doesn’t just point out surface errors. It scans each pull request, digging through context, team standards, and even past merges to give feedback that feels human and relevant. My favorite part? It’s not afraid to ask questions or flag edge cases—instead of just scolding for formatting, it’ll say, “This change breaks a key function used in another module. Did you mean to refactor this?”

- Full-project awareness: Qodo looks beyond the diff, referencing related files and functions that tie into the current changes.

- Impact-first flagging: Critical bugs or security issues get flagged with strong warnings, not buried in noise.

- In-line explanations: You get not only the ‘what’ but the ‘why’, making it easier to teach, onboard new devs, or explain rejection reasons during code review.

If you want a deeper user perspective, another team published a detailed breakdown: “Is QoDo.ai Really Worth It for Code Review in 2025?”

True Team Context: Smarter Than a Static Linter

Many tools treat every repo the same, but Qodo adapts to your internal style and unwritten rules. When I connected it to a repo that used a blend of legacy and modern syntax, Qodo’s feedback adjusted accordingly. It remembered past merges, prioritized our config files, and flagged only the real risks—not old style quirks.

Here’s a quick look at how Qodo stands out compared to old-school linters:

| Feature | Qodo AI | Classic Linter |
|---|---|---|
| Knows your code history | Yes | No |
| Adapts to team rules | Yes | No |
| Prioritizes by risk | Yes | No |

This team awareness is what helps it beat tools like Copilot or CodeRabbit, as other users noted in this Reddit discussion.

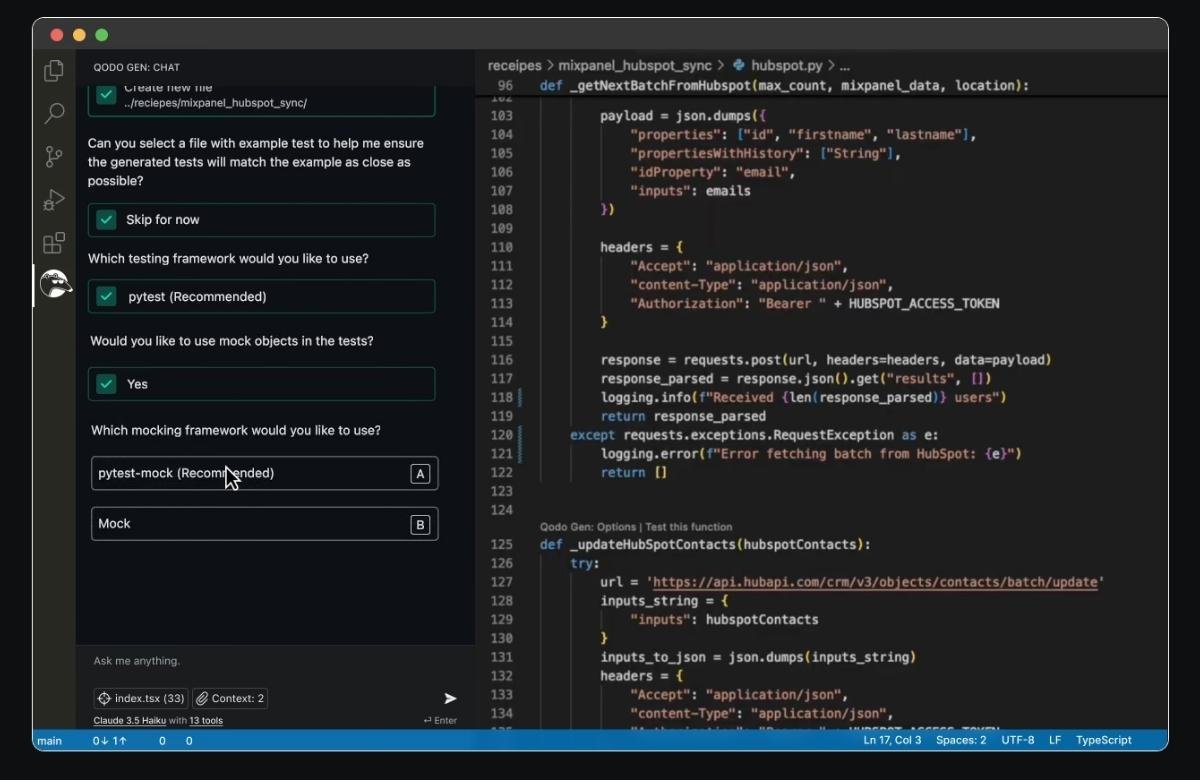

In-IDE Support That Cuts Manual Work

A key win in my Qodo AI review: most feedback isn’t limited to the web dashboard. Inside Visual Studio Code or JetBrains IDEs, I saw review suggestions, autofixes, and even AI-generated test examples, all while editing. This moves code review out of the PR-queue bottleneck and into your everyday workflow.

Benefits I noticed:

- Instant feedback before pushing to main branches

- Suggested edits you can accept or reject, saving mental effort on small stuff

- Test coverage hints built into your editor, making test writing faster

For developers constantly context-switching between code and review, this saves small but steady blocks of time. That adds up across a sprint.

Prioritization and Risk Ranking

Instead of a firehose of issues, Qodo sorts problems into critical, important, and optional fixes. This helped my team focus reviews on actual blockers and not get stuck arguing about nits. High-severity bugs are flagged right at the top of the PR summary.

Here’s what this looks like in practice:

- Critical: Immediate attention needed (e.g., security holes, logic breaks)

- Important: Fix soon, but not blocking merge (e.g., performance, deprecated code)

- Optional: Team style tweaks, comments, documentation

By grouping feedback this way, Qodo makes it less likely that serious risks get lost in a sea of minor warnings. In fast-moving projects, this is a lifesaver.
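The three-tier triage above boils down to a group-and-sort step. The Python sketch below is hypothetical, not Qodo’s code: the `Finding` fields, the `Severity` names, and the rule that only critical findings block a merge are assumptions drawn from the tier descriptions in this section.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Dict, List


class Severity(IntEnum):
    """Hypothetical tiers mirroring the three buckets described above."""
    CRITICAL = 0   # needs immediate attention (security holes, logic breaks)
    IMPORTANT = 1  # fix soon, not blocking (performance, deprecated code)
    OPTIONAL = 2   # style tweaks, comments, documentation


@dataclass
class Finding:
    file: str
    line: int
    message: str
    severity: Severity


def triage(findings: List[Finding]) -> Dict[Severity, List[Finding]]:
    """Group findings by tier (critical first), each tier sorted by file and line."""
    groups: Dict[Severity, List[Finding]] = {tier: [] for tier in Severity}
    for finding in sorted(findings, key=lambda item: (item.file, item.line)):
        groups[finding.severity].append(finding)
    return groups


def blocks_merge(findings: List[Finding]) -> bool:
    """Assumed rule for this sketch: only critical findings block the merge."""
    return any(finding.severity == Severity.CRITICAL for finding in findings)


if __name__ == "__main__":
    findings = [
        Finding("api/users.py", 88, "Missing docstring", Severity.OPTIONAL),
        Finding("api/auth.py", 42, "SQL query built from user input", Severity.CRITICAL),
        Finding("api/users.py", 12, "Deprecated API call", Severity.IMPORTANT),
    ]
    for tier, items in triage(findings).items():
        print(tier.name, [f"{item.file}:{item.line}" for item in items])
    print("Merge blocked:", blocks_merge(findings))
```

Because the groups are emitted in tier order rather than file order, the SQL injection finding surfaces first even though it was reported second, which is exactly the behavior that keeps serious risks from drowning in style nits.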

Pull Request Summaries and Actionable Comments

After running a full review cycle, Qodo produced clear, easy-to-skim summaries in every PR. Instead of sorting through dozens of automated comments, I got focused outlines of what needs changing, what is good to merge, and where manual review is still recommended.

- Readable summaries list all flagged items

- Direct links to suggested diffs

- Merge blockers called out clearly

This is especially handy when you’re hopping through multiple reviews or onboarding someone who needs a big-picture sense of each code change.
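A skimmable summary like the one described above can be produced from a list of review comments. This is another hypothetical Python sketch: the `Comment` fields, the severity strings, and the Markdown layout are assumptions, not Qodo’s actual output format. It counts comments per tier and lists only the merge blockers, so a reviewer sees what stops the merge before anything else.

```python
from collections import Counter
from typing import List, NamedTuple


class Comment(NamedTuple):
    """Hypothetical review comment; the fields are illustrative assumptions."""
    severity: str  # "critical", "important", or "optional"
    location: str  # e.g. "src/app.py:42"
    message: str


def render_summary(comments: List[Comment]) -> str:
    """Render a Markdown PR summary with tier counts and merge blockers."""
    counts = Counter(c.severity for c in comments)  # missing tiers count as 0
    blockers = [c for c in comments if c.severity == "critical"]
    lines = [
        "## Review summary",
        "",
        f"{counts['critical']} critical, {counts['important']} important, "
        f"{counts['optional']} optional",
        "",
    ]
    if blockers:
        lines.append("**Merge blockers:**")
        lines += [f"- {c.location}: {c.message}" for c in blockers]
    else:
        lines.append("No merge blockers found. Manual review still recommended.")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_summary([
        Comment("critical", "src/auth.py:42", "Token compared with == (timing leak)"),
        Comment("optional", "src/auth.py:10", "Add a module docstring"),
    ]))
```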

The 8 Out of 10 Verdict for Qodo AI

In this review, I rate Qodo AI a strong 8 out of 10. It’s focused, practical, and better at learning team habits than most automated reviewers. You may still need a senior dev for the thorny cases, but if your goal is to speed up safe merges and avoid missed bugs, Qodo punches above its weight.

For a next step, I’d suggest comparing these features with other top rivals—see my hands-on test results with the best AI coding assistants in 2025 for a practical matchup.

Qodo AI’s strengths aren’t just technical—they’re about cutting noise, respecting team norms, and flagging what matters first. In a sea of generic AI reviewers, those features stood out again and again in day-to-day use.

Practical Experience: Performance, Accuracy, and Limitations

Qodo AI is not just another checkbox tool—it lives and dies by how it performs in the hands of busy developers. When I put Qodo through its paces on actual team projects, I paid attention to speed, precision, and the spots where it falls short or needs extra help. This section breaks down how Qodo AI holds up in daily use, the type of results you can expect, and the real-world challenges you need to know about.

Performance: How Fast and Smooth is Qodo AI?

Performance matters when you’re juggling a full backlog of pull requests. Qodo AI showed snappy review times in my tests. Even on large repos, the feedback loop stayed short, usually under a minute per PR, whether the pull request had 20 lines or 200. According to the State of AI Code Quality in 2025 report, most developers noticed clear gains in code review speed after rolling out AI tools like Qodo.

It’s not just speed, though. Here’s where Qodo’s performance stood out:

- Low delay on feedback: Comments, merge-blocker alerts, and summaries arrived quickly, making it easy to stay in the flow.

- Scales well with team activity: Even with multiple open PRs, Qodo managed to keep review times down. I rarely saw it stuck “thinking” for more than a few seconds.

- Minimal lag in IDE plugins: In-editor suggestions and code highlights appeared fast whether I used VSCode or JetBrains.

If you care about pushing code without fighting your review tool, Qodo earns a 9 out of 10 for performance.

Accuracy: Does Qodo AI Spot Real Issues?

Speed is great, but a fast answer means nothing if it’s wrong. In my Qodo AI review testing, accuracy levels felt strong:

- Consistent flagging of dangerous bugs: Security holes, logic errors, and breaking API changes got surfaced before merge. Qodo was reliable about warning us if deleting a utility function might impact another module.

- Contextual style and doc checks: Unlike static linters, Qodo skipped petty complaints and focused on risks and policy gaps. This was possible thanks to its broad codebase context.

- Relevant suggestions, fewer false positives: Instead of a waterfall of trivial warnings, Qodo limited alerts to real issues. My team spent less time sifting through noise.

The numbers from the State of AI Code Quality in 2025 report back this up: 59% of teams reported better code quality after using tools in Qodo’s class, while only a small slice saw a decline that needed careful monitoring.

Still, nothing’s perfect. Every so often, Qodo flagged a safe refactor as “risky” or missed a subtle edge case that a human would catch. My verdict? For catching obvious and common bugs, Qodo hits a solid 8 out of 10.

Limitations: Where Qodo AI Stumbles

Like any AI-driven code assistant, Qodo has blind spots and breaking points you need to plan for. It’s important to be honest here—AI code review will not replace your best developers, and sometimes it stumbles on the nuance that comes with real project context.

Here’s what you need to watch for:

- Complex logic and business rules: Qodo can misjudge changes that depend on deep domain knowledge or interconnected business logic. For big-ticket merges or tricky edge cases, human eyes are still needed.

- False alarms and missed edge cases: While less noisy than old-school linters, there are moments Qodo pings you for safe choices or overlooks rare but risky bugs. Treat its suggestions as a starting line, not gospel.

- Limited value on novel codebases: On new repos without much commit history or team pattern data, Qodo’s context-awareness drops. It improves after seeing more real-world use.

If you want a high-level cheat sheet, here’s how Qodo’s strengths and limits stack up:

| Factor | Qodo AI | Human Reviewer | Static Linter |
|---|---|---|---|
| Speed | Very Fast | Moderate | Fast |
| Deep Contextual Awareness | Strong* | Best | Weak |
| Error/Bug Detection | High | Best | Moderate |
| False Positives | Low to Moderate | Low | High |
| Handling Edge Cases | Fair | Best | Poor |

*Improves with repo history and usage.

With an honest lens, I rate Qodo AI as a strong 8 out of 10 for overall impact on day-to-day workflows. For teams who want to save time, reduce mental load, and maintain code health, it punches above its weight—but don’t set it and forget it.

Want a closer look at how Qodo AI compares with other coding assistants? Check out “AI Code Review and the Best AI Code Review Tools in 2025” for more experience-driven insights from developers who tested Qodo head-to-head with its top rivals.

Qodo wins because it blends speed with focused review accuracy, but if your team handles sensitive, complex, or regulated code, keep human approval as the final step. That’s the best recipe for safe deployment in any shop.

Qodo AI vs Other Leading AI Coding Assistants

Every developer wants an assistant that actually lightens the load, not just another alert machine. In this section, I’m breaking down where Qodo AI fits alongside heavyweight AI coding assistants. I’ll pull from hands-on trials and community feedback, comparing key features, day-to-day reliability, and which tool works best for different jobs. If you’re picking an AI coding copilot this year, here’s exactly how Qodo stacks up.

Core Comparison: Qodo AI vs the Heavy Hitters

Most teams look at the classics first—GitHub Copilot, Codeium, Tabnine. Qodo AI sits in the same league, but aims for more than just code generation or linting. Here are the main areas that made the difference in my tests:

- Context awareness: Qodo AI digs deep into the codebase, pulling past merges, team standards, and project quirks into every review; it feels like it’s shadowing your workflow instead of reciting a rulebook. Copilot and Tabnine give quick answers but rarely remember last week’s refactor or why your team avoids certain hacks.

- PR-focused reviews: Qodo is built for live pull requests and team review, not just code suggestions in the editor. The feedback ends up in the PR with clear, ranked risks and actionable fixes. By contrast, Copilot shines for in-line code generation but can get fuzzy when asked to flag merge blockers.

- Severity tiering and explanations: Issues land grouped by risk (critical, important, optional), so you stop spending time on the small stuff. I found this clear prioritization missing from most coding AIs except for advanced options like Amazon CodeWhisperer, which still won’t tailor as tightly to your workflow.

- Team and codebase memory: Qodo doesn’t just scan the diff; it traces code history, team style, and repeat issues. Tools like Tabnine and Codeium are powerful for snippets and suggestions, but not as consistent when actual context from hundreds of files is on the line.

When you look for a reviewer who “gets” both your codebase and your team’s habits, Qodo lands near the top. Based on hands-on use, I’d rate Qodo AI a solid 8 out of 10 for real project reliability and value.

Feature Breakdown: How Qodo AI Matches Up

Features set tools apart, but lived experience seals the deal. Here’s a table putting Qodo AI side by side with Copilot, Tabnine, and Codeium (the contenders I tested most):

| Feature | Qodo AI | GitHub Copilot | Tabnine | Codeium |
|---|---|---|---|---|
| Deep Codebase Context | Yes | No | Some | Some |
| Rank Issues by Severity | Yes | No | No | No |
| Pull Request Automation | Yes | No | Limited | Yes |
| In-Editor Suggestions | Yes | Yes | Yes | Yes |
| Custom Team Awareness | Yes | No | No | No |
| Test Case Generation | Yes | No | No | Yes |
| Pricing Model | Subscription | Subscription | Freemium | Free |

My quick take:

If you want an assistant that fits into team code review and avoids shallow alerts, Qodo AI is a front-runner. Copilot still wins for instant code completions and “flow state,” while Tabnine and Codeium are good for basic auto-suggestions. In real group development, though, Qodo’s pull request skills and codebase memory saved me way more back-and-forth.

For a broader feature breakdown, check out 20 Best AI Coding Assistant Tools for 2025. It spotlights where each assistant nails daily workflow—and where Qodo AI makes up ground.

User Feedback and Community Standings

What matters is how these tools measure up in real projects. I’ve seen lively discussions and solid user stories pointing to Qodo’s unique strengths in team reviews. Over on Reddit, the general consensus is that Qodo AI offers smarter context and more detailed PR commentary than classic tools, especially for VSCode users.

Quick highlights from peer devs and my own experience:

- Qodo AI shines for connected teams that want less noise and more relevance during pull request season.

- Copilot rules in solo coding and rapid-fire prototyping but can drown reviewers in auto-suggestions on group projects.

- Tabnine wins on speed, Codeium is a friendly freebie, but both trade away advanced review features you’ll notice if you’re shipping as a group.

If you want to see how Qodo fits among all 2025 code assistants, Spacelift’s guide, 20 Best AI-Powered Coding Assistant Tools in 2025, offers broad coverage backed by workflow examples.

Where Qodo AI Outperforms (and Where It Doesn’t)

Where Qodo wins:

- Best fit for teams with complex, changing codebases.

- Top value in group review cycles, onboarding, and catching risky changes early.

- Actual prioritization of issues, not just a laundry list of flagged lines.

Where the competition still holds advantages:

- Copilot and Tabnine remain faster for snippet completions and off-the-cuff code writing.

- Budget-conscious solo devs may opt for Codeium as a no-cost assistant.

- Qodo needs time to learn a codebase, so its full strengths show in established projects, not brand-new repos.

For deeper matchups, you can always continue your research with expert-driven comparisons of the best AI coding assistants in 2025.

Overall, Qodo AI gets my 8 out of 10 rating: great where review quality matters, among the strongest picks for teams, and still catching up in some instant-coding cases. For fast-moving dev shops, it might just be the teammate you’re missing.

Conclusion

After weeks of hands-on testing, my frank take is that Qodo AI delivers real gains for teams who value speed, accuracy, and reliable code review context. Its smart bug detection, nuanced pull request support, and deep integration with team norms make it stand out from generic code assistants. I give Qodo AI a fair 8 out of 10 for its balance of usability, seamless integrations, review quality, security awareness, and overall value for money.

You’ll get the most out of Qodo AI if your workflow involves many pull requests, cross-team contributions, or complex business logic. Still, it’s not a full replacement for skilled human review—especially on brand-new projects or where unique domain knowledge shapes critical paths. Its strengths lie in catching real bugs, reducing bottlenecks, and turning code review into a team-strengthening step, not just another chore.

If your priorities match mine—clearer feedback, less busywork, honest risk alerts, and faster reviews—Qodo AI is easy to recommend for everyday team development. It’s worth exploring deeper side-by-side matchups with rivals and extra tips on picking the right platform in the dedicated best AI coding assistants in 2025 guide at AI Flow Review.

Tried Qodo AI yourself or have a workflow worth sharing? Drop your tips or questions below. For those searching for the next sharp upgrade to their code review stack, Qodo AI might just hit that perfect sweet spot. Thanks for sticking with this Qodo AI review—here’s to code that stays fast, sane, and clean.