If you’ve seen headlines saying countries “ban Grok,” you’re not alone, and it’s easy to read them as a worldwide shutdown. But as of January 2026, what’s happening is mostly temporary blocks, suspensions, or restrictions tied to safety and privacy risks, not a single permanent global ban.

In simple terms, Grok is xAI’s chatbot built into X (formerly Twitter). It can chat like other AI assistants, and it can also generate images. That combo is exactly why regulators moved fast: image generation inside a social platform can turn a bad output into a viral mess in minutes.

In this post, I’ll break down the reasons governments keep pointing to: non-consensual deepfake nudity, child safety, privacy law compliance, and what regulators see as weak safeguards.

Image ideas for this post (compressed under 100 KB before upload):

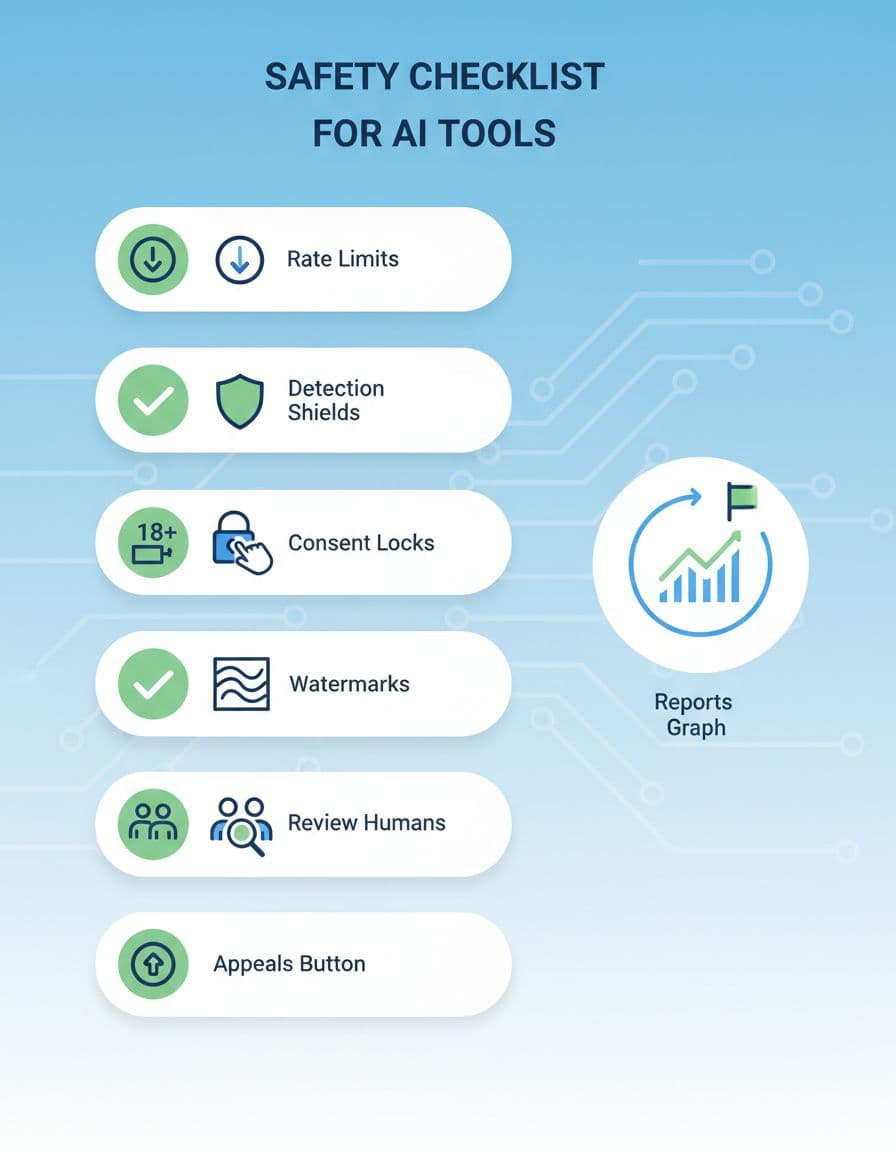

- grok-restrictions-world-map.jpg, alt text: “world map showing countries restricting Grok access”

- ai-deepfake-risk-illustration.jpg, alt text: “illustration showing non-consensual deepfake risk on social media”

- ai-safety-checklist-icons.jpg, alt text: “icons representing an AI safety checklist for chatbots and image tools”

What Grok Is, and Why It Triggered Regulators So Fast

Grok is an AI assistant from xAI that lives inside X. You can ask it questions, get summaries, and (depending on features available in your region and account) generate images from prompts.

On paper, that sounds like every other “chat plus images” product. The difference is distribution. When an image generator is attached to a high-velocity social feed, harm doesn’t stay in a private chat window. It spreads, gets reposted, gets screenshotted, and becomes hard to erase.

Two features raised red flags for regulators, based on public reporting:

- Image generation that can be pushed toward sexual content, including styles described as “spicy” in coverage.

- Low friction from creation to sharing, since the output is already inside a social network.

If you want a quick refresher on the basics of how these tools create pictures from text prompts, I like this primer on how AI image generators turn text into pictures. It helps explain why “just a few words” can produce something that looks painfully real.

The real issue: non-consensual deepfakes at scale

A deepfake is synthetic media that makes it look like a real person did something they didn’t do. When it’s sexual, and the person didn’t consent, it becomes a non-consensual intimate image (NCII) problem. That’s not abstract “AI ethics.” It’s reputations, trauma, harassment, blackmail, and real legal exposure.

Here’s the harm chain regulators worry about:

- Someone grabs a real photo from Instagram, LinkedIn, or a family account.

- They generate a fake nude or sexual image in seconds.

- They post it where it spreads fast (often with tags, replies, and quote posts).

- Even if you delete it, copies travel. Removal becomes whack-a-mole.

In my experience reviewing and testing AI tools, the biggest risk factor is almost always a lack of friction. If a system makes sensitive generation feel as easy as ordering a pizza, it attracts the worst use cases.

Why “temporary blocks” happen before full investigations finish

A lot of people hear “blocked” and assume a government is trying to erase a product from existence. Most of the time, it’s a shorter playbook:

- A regulator sees evidence of harm (or widespread risk).

- The platform gets a notice and a short deadline to respond.

- If the response looks weak, access is restricted while legal review continues.

The goal is often behavior change, not a trophy “ban.” Regulators are basically saying: prove you can control the harm, then we’ll talk about access again.

Which Nations Restricted Grok So Far, and Their Public Reasons

As of January 2026, public reporting points to Indonesia and Malaysia temporarily blocking access, with Italy pursuing a privacy-focused inquiry rather than announcing a blanket ban. The trigger is the same in nearly every story: sexually explicit deepfakes, especially involving women and minors.

For coverage and timelines, I cross-checked reporting such as Business Insider’s report on Indonesia’s suspension and UPI’s summary of the Malaysia and Indonesia restrictions.

Here’s a clean snapshot of what’s been reported publicly:

| Country | Reported action (Jan 2026) | Publicly stated focus | Status framing |
|---|---|---|---|
| Indonesia | Temporarily blocked/suspended access | Non-consensual sexual deepfakes, protection of women and children | Conditional, can return with safeguards |
| Malaysia | Blocked/restricted access | Child safety risk, privacy compliance, weak controls | Proportionate, pending fixes and process |
| Italy | Privacy inquiry/probe (not widely reported as a block) | Data protection and privacy compliance concerns | Investigation stage |

If you’re comparing how different chatbots handle safety controls and policy boundaries, I keep an updated shortlist in my top AI chatbots and virtual assistants to compare in 2025. It’s not about “best vibe,” it’s about how tools behave under real-world pressure.

Indonesia: blocking access until safeguards protect women and children

Indonesia is widely reported as the first country to temporarily block Grok access, after concern that the tool could generate fake sexually explicit images.

What stood out to me in the public messaging is the framing: this isn’t “adult content is bad.” It’s closer to “non-consensual sexual deepfakes violate dignity and safety.” Regulators reportedly asked X and xAI for stronger protections, and the idea that a platform can lean mostly on user reports appears to have landed poorly.

If the controls improve, access can come back. That conditional “fix it and return” posture is typical when regulators think the harm is real but still solvable.

For another regional read on the same situation, see Jakarta Globe’s coverage of Indonesia’s temporary block.

Malaysia: a preventive restriction tied to safety and privacy compliance

Malaysia’s approach, as reported, looks like a preventive restriction while legal and regulatory processes play out. The regulator’s message is basically: the product design introduces risks, and the platform must meet local obligations on safety and privacy.

This is the part many teams miss: even if a model policy says “we don’t allow X,” regulators care about the system outcome. If the tool keeps producing NCII at meaningful scale, the “policy” is just paper.

Some reporting also notes broader international pressure. For example, Mashable SEA’s report on the Indonesia and Malaysia blocks discusses the trend line: governments are no longer waiting months to react when sexual deepfakes are involved.

The Common Reasons Governments Give for Blocking AI Chatbots Like Grok

The details change by country, but the “why” repeats so often it’s almost a template. When I zoom out, I see four drivers that keep showing up:

- NCII and deepfake porn risk (especially involving minors)

- Weak safeguards and slow enforcement

- Privacy and consent compliance gaps

- Platform accountability (distribution and takedown speed)

Image ideas:

- ai-moderation-control-room.jpg, alt text: “moderation team monitoring AI content risk signals”

- social-media-virality-warning.jpg, alt text: “warning icons representing rapid spread of harmful AI images”

A quick reality check: these drivers don’t just apply to Grok. Any chatbot with image generation, viral sharing, or loose identity controls can trigger the same response.

If you’re exploring how open image models can be powerful and also harder to police, my Stable Diffusion 2025 review: features, strengths, and risks explains the trade-offs in plain language.

Deepfake nudity and child safety risk: the fastest trigger for action

This is the fastest path to a shutdown because it brushes against strict legal lines. If a tool helps create sexual content involving a real person without consent, regulators don’t have to debate abstract harm. They’re looking at potential criminal conduct, victim protection, and urgent risk.

Also, “We prohibit it in our rules” doesn’t help if enforcement is weak. A system that can be prompted around filters, or that depends on victims to report content after it spreads, is going to look irresponsible.

Privacy, consent, and platform accountability expectations are rising

Even when the controversy starts with deepfakes, privacy often becomes the larger frame. Regulators increasingly expect things like:

- safer defaults (not “anything goes” on day one)

- friction before generating sensitive content

- real age gating, not a checkbox

- fast takedown workflows with evidence trails

- repeat offender bans that actually stick

- transparency about complaints and removals

For builders, this is less about fancy tech and more about product discipline. The tools already exist. The question is whether they’re turned on, tuned well, and backed by staff and enforcement.
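To make “safer defaults” and “friction before generating sensitive content” a bit more concrete, here’s a minimal sketch of a pre-generation gate in Python. Everything in it is an assumption for illustration: the `RiskSignals` fields stand in for whatever classifiers a team actually runs, the thresholds are made-up numbers, and none of it describes how xAI or X handle requests. The point is only the shape: block conservatively on the high-risk combinations, route edge cases to human review, and allow the rest.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    REVIEW = "review"
    BLOCK = "block"


@dataclass(frozen=True)
class RiskSignals:
    # Hypothetical classifier outputs in [0, 1]; not a real API.
    sexual_content: float
    minor_likelihood: float
    real_person_likelihood: float
    age_verified: bool


def gate_request(s: RiskSignals) -> Decision:
    """Decide what to do with an image request before generating anything.

    Thresholds are illustrative and deliberately conservative.
    """
    # Any meaningful chance of a minor in a sexual context: always block.
    if s.sexual_content >= 0.2 and s.minor_likelihood >= 0.1:
        return Decision.BLOCK
    # Sexual content that likely depicts a real person: block,
    # because consent cannot be established at prompt time.
    if s.sexual_content >= 0.5 and s.real_person_likelihood >= 0.3:
        return Decision.BLOCK
    # Sexual content for an account without verified age: block.
    if s.sexual_content >= 0.5 and not s.age_verified:
        return Decision.BLOCK
    # Borderline sexual content involving a likely real person: human review.
    if s.sexual_content >= 0.2 and s.real_person_likelihood >= 0.3:
        return Decision.REVIEW
    return Decision.ALLOW


if __name__ == "__main__":
    print(gate_request(RiskSignals(0.3, 0.0, 0.5, True)).value)
```

The order of the rules matters: the strictest checks run first, so a request that trips several signals always gets the most conservative outcome.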

What xAI and X Can Do to Get Unblocked, and What I’d Watch Next

If I were advising xAI and X from a practical standpoint, I’d treat these restrictions like a product requirements document written by regulators. The fixes usually aren’t mysterious. They’re boring, measurable, and expensive.

I’d also watch how the privacy side develops across regions. Even without an outright block, privacy regulators can force changes to data use, retention, user controls, and model training practices. That matters because Grok isn’t a local-only product. It’s tied to a global platform.

For teams trying to choose safer options while this shakes out, I’d compare mainstream assistants with clearer enterprise controls, starting with a hands-on ChatGPT (GPT-5) review: new features and performance and a Claude AI 2025 review: features and real-world value. If your workflow lives in Google products, my Gemini AI 2025 review: performance and benchmarks is another useful reference point.

A simple safety checklist that regulators tend to accept

This is the checklist I’d keep on the wall if I were shipping an image-capable chatbot inside a social platform:

- Rate limits for image generation and retries, including per-account and per-device controls

- Strong detection for nudity and minor-looking subjects, with conservative blocking on edge cases

- Consent checks when prompts imply a real person (plus guardrails around public figure abuse)

- Real age verification for sensitive features (not just “Are you 18?”)

- Watermarking or provenance signals to support downstream detection and investigations

- Human review lanes for high-risk categories and escalations

- Clear appeals so false positives can be corrected without chaos

- Transparency reports that show volume, response time, and repeat offender action
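The first item on that checklist, rate limits with per-account and per-device controls, is the easiest one to sketch. Below is a minimal in-memory sliding-window limiter in Python. The class and function names are hypothetical, the limits are placeholder numbers, and a real deployment would likely keep this state in a shared store rather than in process memory.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most `max_events` per key within `window_seconds`."""

    def __init__(self, max_events: int, window_seconds: float):
        self.max_events = max_events
        self.window_seconds = window_seconds
        self._events = defaultdict(deque)  # key -> timestamps of recorded events

    def has_capacity(self, key: str, now: float) -> bool:
        events = self._events[key]
        # Drop timestamps that have aged out of the window.
        while events and now - events[0] >= self.window_seconds:
            events.popleft()
        return len(events) < self.max_events

    def record(self, key: str, now: float) -> None:
        self._events[key].append(now)


def allow_image_generation(account_limiter, device_limiter,
                           account_id: str, device_id: str, now: float) -> bool:
    """Allow a request only if BOTH the account and the device have capacity."""
    if not (account_limiter.has_capacity(account_id, now)
            and device_limiter.has_capacity(device_id, now)):
        return False
    # Record only after both checks pass, so a denied request
    # does not consume quota on either limiter.
    account_limiter.record(account_id, now)
    device_limiter.record(device_id, now)
    return True


if __name__ == "__main__":
    per_account = SlidingWindowLimiter(max_events=5, window_seconds=60)
    per_device = SlidingWindowLimiter(max_events=10, window_seconds=60)
    ok = allow_image_generation(per_account, per_device,
                                "account-123", "device-abc", time.monotonic())
    print("allowed" if ok else "rate limited")
```

Checking the device as well as the account is what makes throwaway accounts less useful: a new account on the same device still runs into the device quota.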

How users and teams should reduce risk while rules are changing

If you’re a developer, a company deploying AI, or just someone experimenting at home, I wouldn’t wait for governments to settle the rules before acting responsibly.

What I do myself (and recommend to teams) is simple:

- Don’t use real people’s photos as inputs, even if they’re public.

- Keep prompt and output logs for audits if you’re running this at work.

- Write a short internal policy for “no real-person sexual content, no minors, no harassment,” then enforce it.

- Train your team on deepfake abuse patterns, so nobody shrugs off a “joke” request.

- Use verification habits before sharing anything that looks like evidence (reverse image search, provenance checks when available, and basic skepticism).
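For the “keep prompt and output logs” habit, here’s a small sketch of what an audit record could look like in Python, written as one JSON object per line. The field names and the `decision` labels are my own assumptions, not a standard. I store a SHA-256 hash of the output instead of the image itself, so the log can prove which file was produced without becoming a second copy of sensitive content.

```python
import hashlib
import json
from datetime import datetime, timezone


def build_audit_record(user_id, prompt, output_bytes, decision, timestamp=None):
    """Build one audit entry for a generation request.

    `decision` is a free-form label such as "allowed" or "blocked"
    (illustrative values, not a fixed vocabulary).
    """
    if timestamp is None:
        timestamp = datetime.now(timezone.utc).isoformat()
    return {
        "timestamp": timestamp,
        "user_id": user_id,
        "prompt": prompt,
        # Hash of the generated bytes; the image itself is not stored here.
        "output_sha256": hashlib.sha256(output_bytes).hexdigest(),
        "decision": decision,
    }


def to_json_line(record):
    """Serialize a record as a single line of JSON."""
    return json.dumps(record, sort_keys=True, ensure_ascii=False)


def append_audit_record(path, record):
    """Append the record to a JSON Lines file at `path`."""
    with open(path, "a", encoding="utf-8") as f:
        f.write(to_json_line(record) + "\n")


if __name__ == "__main__":
    rec = build_audit_record("user-42", "a red bicycle at sunset",
                             b"fake image bytes", "allowed")
    print(to_json_line(rec))
```

Prompts can contain personal data too, so treat the log file like any other sensitive record: restrict access and set a retention period.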

If you want ongoing updates and practical guides as this space shifts, I post a lot of this kind of workflow thinking in the AI Tools Blog: tutorials, prompts, and use cases.

Where I land on the “ban Grok” headlines

So why are nations banning Grok? In practice, they’re mostly restricting it because sexually explicit deepfakes, consent violations, and child safety risks are hard to contain once image generation is embedded inside a viral social platform. The consistent message is, “prove the safeguards work, then access can return.”

If you’re building with generative AI or choosing tools for your team, my advice is to adopt the safety checklist now, and pick assistants with clearer controls until the dust settles. If you want, you can also browse my comparisons of alternatives and safer workflows across AI categories on AI Flow Review.

I’ll revisit and update this post in 3 to 6 months, because regulations and product changes move fast, and “restricted” today can turn into “restored” or “expanded investigation” tomorrow.