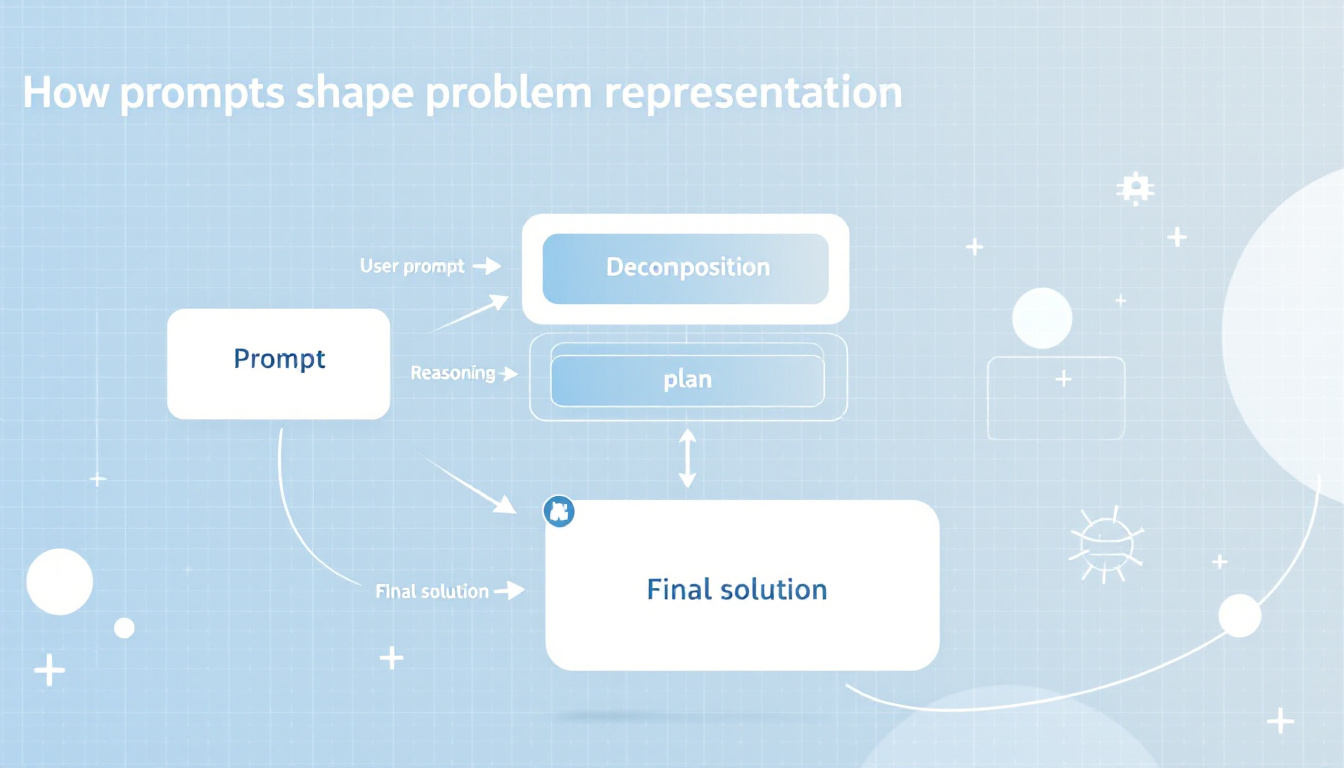

The process of shifting from a prompt to a plan is central to advancing solution quality when working with AI models or formal reasoning systems. A prompt, defined as the specific instruction or input provided to a model, shapes the direction and scope of the response. In contrast, problem representation refers to the manner in which the key elements, constraints, and objectives of a problem are restructured and formalized within the system.

Adopting a plan-based approach in prompting changes how a model represents a complex problem by dividing the task into logical, sequential subparts. Changes in how prompts are constructed can produce significant variation in reasoning accuracy, transparency, and the number of omitted steps. This principle holds particular relevance in domains requiring high construct validity and rigorous analytic decomposition, including clinical case analysis and mathematical problem solving.

Recent empirical studies demonstrate that prompting strategies, such as plan-and-solve and recursive decomposition, encourage more accurate stepwise reasoning and enhanced reliability. The subsequent sections will systematically examine problem breakdown methods, advanced prompting techniques, and their measurable effects on the fidelity of model-generated solutions. For context on evolving AI capabilities in handling complex prompts, see the Claude Review 2025 summary.

Understanding How Prompts Shape Problem Representation

In any setting where structured problem solving is required, the initial framing of a task exerts clear influence on its eventual decomposition and solution path. Both human cognition and artificial intelligence respond not only to the explicit content of an input, but also to its formulation: the words, structure, and constraints that define the scope of the request. This shaping process determines which details receive focus, which are abstracted, and how the entire problem is mapped across cognitive or algorithmic stages.

Defining the Prompt’s Role in Perception

A prompt, in this context, constitutes the guiding instruction that activates specific mental or computational pathways. For humans, prompts shape internal schema, triggering relevant frameworks or prior knowledge. For AI models, prompts serve as input vectors that align model attention and search strategies with certain data patterns or operational routines. Prompt variation can therefore alter the trajectory by which a model, or a person, approaches the construction of a solution.

Careful selection or engineering of prompts frequently determines whether important facets are considered or neglected. For example, a prompt that asks a student to “solve for x” will yield a narrower path of engagement compared to one that specifies “explain the steps to solve for x and provide a justification for each.” This demonstrates how altered prompts serve as levers to adjust depth and scope in problem processing, aligning with findings on prompt engineering effectiveness found in AI Prompt Engineering Examples, Tactics, & Techniques.

The Process of Problem Representation

Problem representation is the transformation of an initial challenge into a defined map of elements, constraints, and intended outcomes. In cognitive science, this process involves encoding relevant details, sequencing actions, and distinguishing between core and peripheral tasks. Within AI, similar conversion occurs as input data is parsed and reconstructed into internal graphs, sequences, or logic modules.

Constructing an effective problem representation relies heavily on the original prompt. A prompt containing multiple constraints will give rise to a more detailed problem space, with branching pathways or modular sub-tasks. By contrast, a vague or under-specified prompt may produce representations with missing nodes or ambiguous transitions. Table 1 illustrates this contrast in both human and AI contexts:

| Prompt Type | Example | Resulting Representation |
|---|---|---|
| Simple directive | “List three causes of WWI.” | Linear list; surface-level enumeration |
| Structured analytic | “Explain three causes of WWI and compare their long-term impacts.” | Mapped network; causal links and comparisons identified |

For detailed guidance on when to employ different forms of prompting in workflow or agent design, refer to A Practical Guide to Choosing Prompts, Workflows, or Agents.

Guiding Problem Breakdown Through Prompt Construction

The phrasing, context, and specificity of a prompt guide the systematic breakdown of a problem into actionable components. In everyday terms, consider the difference between asking an AI to “Write a summary of this article” versus “Identify five key findings from this article and suggest implications for practice.” The first request produces a broad surface summary; the second induces stepwise extraction, categorization, and reasoned extension, leading to a richer and more structured plan.

Empirical results show that incrementally structured prompts encourage both transparent reasoning steps and higher answer accuracy, especially on complex tasks where omission of a single parameter can result in cumulative error. For more on the role of prompts in shaping both human and AI engagement with problem spaces, see Problem Representation in AI: Attributes and Techniques.

The strategic construction of prompts not only initiates the movement from problem to solution, but also establishes the scaffolding upon which logical operations, justification, and assessment are built. Variations in prompt design shift the initial data encoding and, by extension, the completeness and fidelity of the resulting plan.

From Prompts to Plans: Steps in Structured Problem Solving

The movement from an initial prompt toward a fully formed plan exemplifies the operational core of stepwise problem solving in both human and AI contexts. Converting a loosely framed question into an explicit plan serves to reduce ambiguity, prioritize information, and expose latent dependencies among subtasks. Techniques like plan-and-solve separate planning from execution: a logical sequence is outlined first, and only then is any step carried out. This decreases the risk of skipped steps, errors, and incomplete reasoning.

For AI systems, the adoption of advance planning—as opposed to direct, single-pass answering—yields measurable improvements in response accuracy and traceability, as documented by recent analyses of large language model performance. For greater depth on methodologies, the Plan-and-Solve Prompting overview systematically details best practices and results in both experimental and production settings.
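The separation of planning from execution described above can be sketched as a two-call exchange. This is a minimal illustration under stated assumptions, not a reference implementation of any published method: `plan_and_solve`, the prompt wording, and `stub_model` are all hypothetical names, and the stub returns canned text in place of a real model call.

```python
from typing import Callable

# A "model" here is any function mapping a prompt string to a completion string.
Model = Callable[[str], str]


def plan_and_solve(task: str, model: Model) -> dict[str, str]:
    """Run a two-phase exchange: request a plan first, then execute it."""
    plan_prompt = (
        f"Task: {task}\n"
        "Do not solve the task yet. List the numbered steps needed to solve it."
    )
    plan = model(plan_prompt)

    # The plan is fed back so that execution follows the stated sequence.
    solve_prompt = (
        f"Task: {task}\n"
        f"Plan:\n{plan}\n"
        "Carry out the plan step by step, then state the final answer."
    )
    answer = model(solve_prompt)
    return {"plan": plan, "answer": answer}


def stub_model(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; returns canned text."""
    if "Do not solve the task yet" in prompt:
        return "1. Extract the quantities.\n2. Set up the equation.\n3. Compute."
    return "Speed = 84 km / 2 h. Final answer: 42 km/h"


result = plan_and_solve(
    "A train travels 84 km in 2 hours. What is its speed?", stub_model
)
print(result["plan"])
print(result["answer"])
```

Keeping the plan as a separate return value means it can be inspected or edited before the second call is made, which is where the traceability benefit arises.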

Examples of Effective Stepwise Prompting

To illustrate the practical effects of stepwise prompting, two contrasting scenarios clarify its utility. The first draws from ordinary human problem solving; the second reflects stepwise AI model completion. Both demonstrate that mapping a clear intermediate plan before taking action produces more complete, reliable results.

Everyday Human Example:

Prompt: “Plan a weekly grocery run for a healthy diet.”

Stepwise Plan:

- Identify dietary requirements (e.g., vegetarian, low-carb).

- List food groups and quantities to meet needs (vegetables, grains, proteins, etc.).

- Check current pantry and refrigerator stock.

- Create categorized shopping list (produce, dairy, dry goods).

- Schedule optimal shopping time and route.

This granular planning approach allows constraints (health goals, inventory, logistics) to surface early, minimizing the risk of missing essentials or overspending.

AI Model Example:

Prompt: “Summarize and evaluate the key findings in this scientific paper.”

Stepwise Plan (AI guided):

- Extract the research question and objectives.

- Identify main methods and data sources.

- Summarize major results.

- List stated conclusions.

- Critically assess strengths and limitations.

By compelling the AI to articulate a plan before generating text, users can confirm that critical analytical criteria (method validity, result context) will not be omitted. For an expanded methodology guide, see this breakdown of plan-and-solve prompting techniques.

In both cases, separating the planning process from execution results in higher-fidelity problem representations and more reliable outcomes, as compared to unstructured or single-pass task completion.
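One way to confirm that a plan does not omit critical criteria is to check it before execution begins. The sketch below uses simple keyword matching, which is an assumption made for illustration; `REQUIRED_CRITERIA` and `missing_criteria` are hypothetical names, and a real review would likely need something more robust than substring checks.

```python
# Hypothetical criteria a reviewer wants every plan to cover,
# each mapped to keywords that signal coverage.
REQUIRED_CRITERIA = {
    "methods": ["method"],
    "results": ["result"],
    "limitations": ["limitation", "weakness"],
}


def missing_criteria(plan_steps: list[str], required: dict[str, list[str]]) -> list[str]:
    """Return the names of criteria that no plan step mentions."""
    text = " ".join(plan_steps).lower()
    return [
        name
        for name, keywords in required.items()
        if not any(keyword in text for keyword in keywords)
    ]


plan = [
    "Extract the research question and objectives.",
    "Identify main methods and data sources.",
    "Summarize major results.",
    "List stated conclusions.",
    "Critically assess strengths and limitations.",
]

print(missing_criteria(plan, REQUIRED_CRITERIA))      # prints []
print(missing_criteria(plan[:3], REQUIRED_CRITERIA))  # prints ['limitations']
```

The truncated plan in the second call stops before the assessment step, so the check flags the missing criterion before any text is generated.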

Advanced Prompting Techniques That Reshape Problems

More complex problems often require advanced structures that actively reshape representations beyond simple sequential planning. Methods like Tree-of-Thought and Recursion-of-Thought prompting exemplify this trend by formalizing iterative and branching approaches.

- Tree-of-Thought Prompting:

This technique compels an AI model to produce a branching set of intermediate solutions or ideas at each decision node, rather than a single sequence. Each branch represents an alternate strategy or subsolution, collectively forming a tree. By exploring several possible pathways, the model avoids premature narrowing of the solution space and is less likely to miss edge cases.

- Recursion-of-Thought Prompting:

Here, the AI is prompted to recursively revisit its reasoning or proposed plans after each major step or upon encountering impasses. At each recursion, the model reevaluates its progress, adjusts for missed details, and iterates planning or solution-generation with newly incorporated insights. This recursive loop mimics forms of human metacognition, enhancing the accuracy, depth, and robustness of problem representation.

Both techniques fundamentally shift the nature of problem representation from flat or linear to multidimensional or adaptive. They encourage models to consider not just how to solve, but how many distinct approaches exist and which are most likely to yield high-validity solutions. The measured application of these frameworks supports improved error detection, transparency, and breadth of analysis in both human and machine-aided reasoning. A technical review on balancing context and keywords in AI prompts provides additional reference on constructing effective, nuanced prompt architectures that align with advanced stepwise techniques.
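The branching idea can be illustrated as a small search over partial solutions in which several candidates are kept alive at each level. The sketch below assumes a breadth-first search with a fixed beam width, which is only one possible way to organize such a tree; `tree_of_thought`, `stub_expand`, and `stub_score` are hypothetical, and in practice the expansion and scoring functions would each wrap a model call.

```python
from typing import Callable

Expand = Callable[[str], list[str]]  # partial solution -> candidate next steps
Score = Callable[[str], float]       # partial solution -> heuristic value


def tree_of_thought(root: str, expand: Expand, score: Score,
                    depth: int = 2, beam_width: int = 2) -> str:
    """Search level by level, keeping only the best few branches at each level."""
    frontier = [root]
    for _ in range(depth):
        candidates = [
            f"{state} -> {step}" for state in frontier for step in expand(state)
        ]
        if not candidates:
            break
        # Keep several branches rather than committing to a single path.
        frontier = sorted(candidates, key=score, reverse=True)[:beam_width]
    return max(frontier, key=score)


def stub_expand(state: str) -> list[str]:
    """Hypothetical generator: proposes the same three strategies everywhere."""
    return ["algebraic approach", "numeric check", "guess"]


def stub_score(state: str) -> float:
    """Hypothetical evaluator: rewards distinct strategies, penalizes guessing."""
    distinct = sum(keyword in state for keyword in ("algebraic", "numeric"))
    return distinct - 0.5 * state.count("guess")


best = tree_of_thought("Solve x^2 = 9", stub_expand, stub_score)
print(best)
```

With these stubs, the highest-scoring paths are those that combine the algebraic and numeric strategies, while any path containing a guess is pruned or outranked.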

Why Better Prompts Lead to Better Solutions

Photo credit: Image generated by AI


The link between prompt quality and solution quality has been firmly established in research examining both computational models and applied domains such as education, management, and design. Precise and structured prompts not only guide AI algorithms to more reliable outputs, but also condition human problem-solvers—students, analysts, teams—to frame, decompose, and solve problems more methodically. The specificity, clarity, and depth of the prompt serve as latent scaffolds, influencing the completeness, accuracy, and replicability of resulting solutions. This section analyzes the mechanisms by which refined prompts enable higher standards of reasoning and outcome.

Mechanisms of Improved Problem Representation

The structure of a prompt directs attention toward relevant variables, defines boundaries, and sets expectations for response quality. When a prompt incorporates explicit instructions, constraints, or evaluation criteria, it signals to the solver which facets of the problem require formal consideration. For example, prompts that mandate a comparison, justification, or sequencing of actions encourage the solver to build layered cognitive models, exposing hidden relationships and dependencies among problem elements.

Empirical analyses of educational settings confirm that clear, focused prompts enhance student engagement with core concepts, reduce extraneous cognitive load, and yield more comprehensive written and oral responses. Similarly, in AI-assisted workflows, prompts specifying distinct steps or desired logical patterns result in output that more closely aligns with expert-derived standards of accuracy and traceability. For more on prompting frameworks and their effects, consult the top prompting techniques that detail practical methods validated across diverse tasks.

Reduction of Ambiguity and Error Propagation

Poorly constructed prompts often leave key parameters underdefined, introducing ambiguity that cascades through the reasoning process. This not only increases the risk of omitted steps but also promotes error propagation, as initial misunderstandings distort all subsequent actions. By contrast, well-formulated prompts operate as boundary conditions in mathematical systems—partitioning the problem space, constraining solution forms, and enabling the use of confirmatory processes to check each stage of work.

Quantitative evaluations in AI development have shown that techniques such as chain-of-thought prompting or role-prompting can reduce off-target responses and omission rates by substantial margins (Prompt engineering techniques). In collective settings, robust prompts standardize interactions within teams, minimizing interpretative discrepancies and ensuring that all members operate from a harmonized problem schema.

Facilitation of Stepwise Planning and Meta-Reasoning

A refined prompt often requires the solver to construct a plan of action before proceeding to execution. This delay in immediate response creation allows for the activation of metacognitive skills: identifying assumptions, projecting intermediate outcomes, and preemptively detecting potential obstacles. Such anticipatory planning aligns with findings in cognitive psychology regarding the importance of explicit goal-setting and structured rehearsal in complex problem-solving.

In the context of AI, this is operationalized through sequential or recursive prompting schemas that force the model to lay out reasoning chains or to reassess interim answers. The resultant solutions are more resilient to logical breakdowns and often exhibit greater compliance with task-specific validity criteria. For human teams, similar effects are observed in settings that employ checklists or diagnostic frameworks as prompting tools.
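The reassessment of interim answers can be sketched as a bounded revision loop. This is an illustrative assumption about how such a schema might be wired together, not a description of any specific published technique; `solve_with_review`, `stub_model`, and `stub_check` are hypothetical names, and the check would normally be a validator or a second model call.

```python
from typing import Callable, Optional

Model = Callable[[str], str]
Check = Callable[[str], Optional[str]]  # returns an issue description, or None if acceptable


def solve_with_review(task: str, model: Model, check: Check,
                      max_revisions: int = 2) -> tuple[str, int]:
    """Answer once, then re-prompt with feedback until the check passes."""
    answer = model(task)
    revisions = 0
    while revisions < max_revisions:
        issue = check(answer)
        if issue is None:
            break
        revision_prompt = (
            f"{task}\n"
            f"Previous answer:\n{answer}\n"
            f"Issue found: {issue}\n"
            "Revise the answer to fix this issue."
        )
        answer = model(revision_prompt)
        revisions += 1
    return answer, revisions


def stub_model(prompt: str) -> str:
    """Hypothetical model: omits a root at first, corrects it when asked to revise."""
    if "Revise the answer" in prompt:
        return "x = 3 or x = -3"
    return "x = 3"


def stub_check(answer: str) -> Optional[str]:
    """Hypothetical validator for this one task."""
    return None if "-3" in answer else "The negative root is missing."


final_answer, revision_count = solve_with_review("Solve x^2 = 9.", stub_model, stub_check)
print(final_answer, revision_count)  # prints: x = 3 or x = -3 1
```

The cap on revisions keeps the loop from running indefinitely when the check can never be satisfied.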

To explore technical strategies and implementation details, readers may reference Azure’s prompt engineering techniques and structured guides evaluated in applied cognitive science research.

Impact Across Human, Team, and Automated Contexts

The beneficial effects of improved prompts are not limited to computational models. Educational experiments and field studies document parallel gains among students and professional teams when prompts facilitate analytical decomposition, explicit hypothesis formation, or systematic review. In organizational settings, prompt quality affects not only the speed and reliability of generated solutions but also the capacity for error identification and long-term learning.

Consider the following table, which synthesizes evidence from research on individual, group, and AI settings:

| Prompt Quality | Human Output | Team Collaboration | AI Output |
|---|---|---|---|
| Generic/Vague | Surface-level responses | Uncoordinated efforts | Incomplete or scattered output |
| Focused/Structured | Deep analysis, stepwise logic | Synchronized workflows | Accurate, consistent answers |
| Process-Oriented | Planned problem-solving | Shared frameworks | Stepwise, traceable logic |

As this table indicates, greater prompt specificity and structure are associated with improvements in accuracy, coordination, and traceability across individual, team, and AI settings.

Practical Techniques for Enhancing Prompt Quality

Adopting the following practical approaches can help optimize problem representation and boost solution quality in both human and AI-centred task settings:

- Clearly define objectives and expected outcomes in the prompt.

- Specify constraints, criteria, or desired steps to guide solution development.

- Use worked examples, especially in training or educational settings.

- Incorporate process-awareness or role-based cues (such as prompting the respondent to think like a particular expert).

- Encourage explicit articulation of assumptions and intermediate reasoning.
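The techniques listed above can be combined in a single prompt template. The sketch below is one possible arrangement, offered as an assumption rather than a validated format; `build_prompt`, its parameters, and the section wording are all hypothetical.

```python
def build_prompt(objective: str, constraints: list[str],
                 example: str = "", role: str = "") -> str:
    """Assemble a prompt from an objective, constraints, an example, and a role cue."""
    sections = []
    if role:
        sections.append(f"Act as {role}.")  # role-based cue
    sections.append(f"Objective: {objective}")  # explicit objective
    if constraints:
        bullet_lines = "\n".join(f"- {item}" for item in constraints)
        sections.append(f"Constraints:\n{bullet_lines}")
    if example:
        sections.append(f"Worked example:\n{example}")
    # Ask for assumptions and intermediate reasoning explicitly.
    sections.append(
        "State your assumptions, then show your intermediate reasoning "
        "before giving the final answer."
    )
    return "\n\n".join(sections)


prompt = build_prompt(
    objective="Explain three causes of WWI and compare their long-term impacts.",
    constraints=["Limit the answer to 300 words.", "Treat each cause separately."],
    role="a historian",
)
print(prompt)
```

Optional sections are simply omitted when empty, so the same function can produce either a minimal or a fully specified prompt.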

To facilitate practical adoption, actionable guides on prompt refinement such as the Prompt Engineering Techniques overview and AI specialist breakdowns on prompt engineering best practices provide stepwise methodologies that have demonstrated consistent effectiveness.

For readers seeking a comprehensive synthesis of established prompting methods with application-based evidence, the essential guide on top prompting techniques consolidates research-backed recommendations across multiple domains.

Conclusion

The transformation from prompt to plan establishes the structure of problem solving by determining both the encoding of key elements and the progression of reasoning. The design of a prompt controls which constraints are surfaced, how steps are sequenced, and whether critical logic is applied. When prompts are precise and structured, models and people alike achieve more valid, complete solutions. This holds both in algorithmic systems and in collective or individual human work.

The practical value lies in directly experimenting with prompt construction, refining instructions until both the problem representation and solution pathway are transparent and comprehensive. Readers aiming to strengthen their application of these methods should continue to examine documented guides and experiment with new strategies for prompt engineering.